Linear Regression: Model Selection

Model Selection

Often when we are developing a linear regression model, part of our goal is to explain a relationship. Now, we will ignore much of what we have learned and instead simply use regression as a tool to predict. Instead of a model which explains relationships, we seek a model which minimizes errors.

First, note that a linear model is one of many methods used in regression.

To discuss linear models in the context of prediction, we introduce the (very boring) Advertising data that is discussed in the ISL text (see supplemental readings). It can be found at https://www.statlearning.com/s/Advertising.csv.

Advertising <- read_csv('https://www.statlearning.com/s/Advertising.csv')## New names:

## Rows: 200 Columns: 5

## ── Column specification

## ──────────────────────────────────────────────────────── Delimiter: "," dbl

## (5): ...1, TV, radio, newspaper, sales

## ℹ Use `spec()` to retrieve the full column specification for this data. ℹ

## Specify the column types or set `show_col_types = FALSE` to quiet this message.

## • `` -> `...1`Advertising = Advertising %>%

dplyr::select(TV, radio, newspaper, sales)

Advertising## # A tibble: 200 × 4

## TV radio newspaper sales

## <dbl> <dbl> <dbl> <dbl>

## 1 230. 37.8 69.2 22.1

## 2 44.5 39.3 45.1 10.4

## 3 17.2 45.9 69.3 9.3

## 4 152. 41.3 58.5 18.5

## 5 181. 10.8 58.4 12.9

## 6 8.7 48.9 75 7.2

## 7 57.5 32.8 23.5 11.8

## 8 120. 19.6 11.6 13.2

## 9 8.6 2.1 1 4.8

## 10 200. 2.6 21.2 10.6

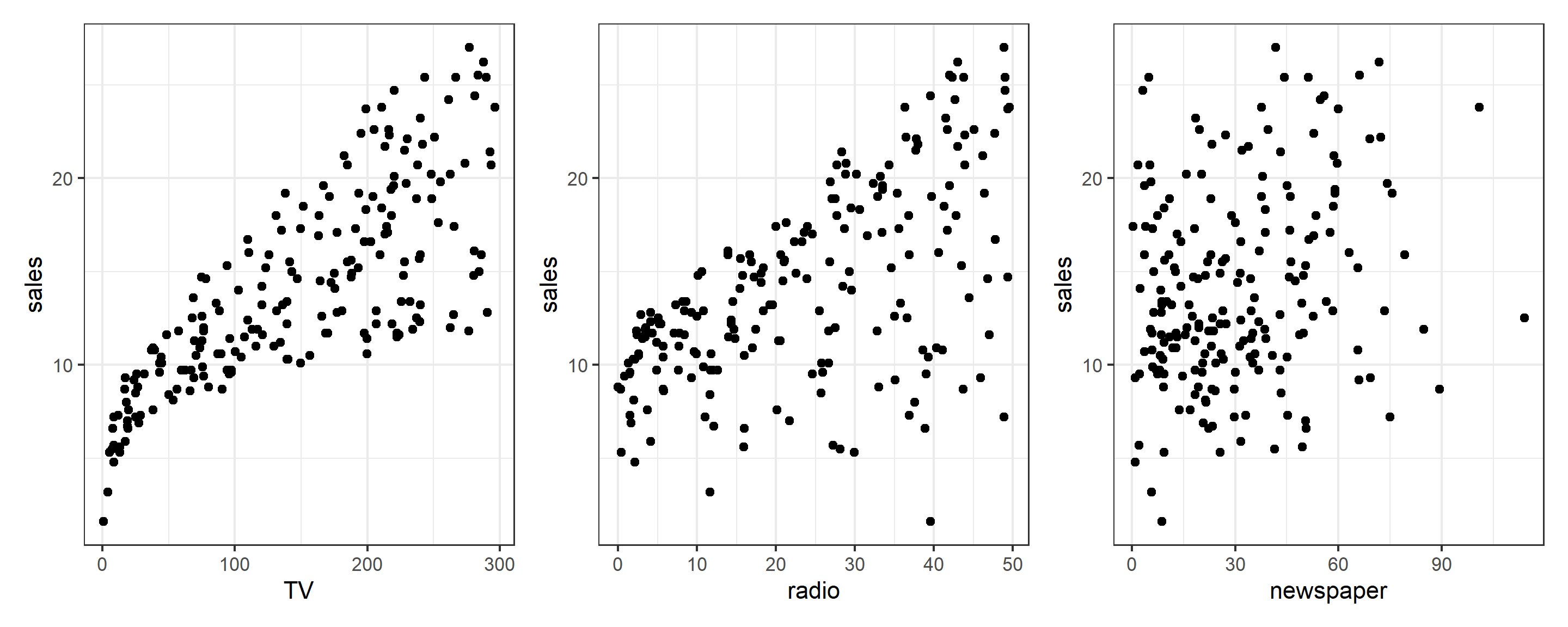

## # ℹ 190 more rowsWe can take a look at the relationship between sales and the three modes of advertising using the patchwork package (or any number of other ways)

library(patchwork)

tv = ggplot(Advertising, aes(x = TV, y = sales)) + geom_point() + theme_bw()

rad = ggplot(Advertising, aes(x = radio, y = sales)) + geom_point() + theme_bw()

news = ggplot(Advertising, aes(x = newspaper, y = sales)) + geom_point() + theme_bw()

tv + rad + news

Here we see a relationship between each of the advertising modes and sales, but we might want to know more. Specifically, we probably want to know something about the sales-maximizing combination of the three modes. For that, we’d need a good model of sales. But what does “good” mean?

Assesing Model Accuracy

There are many metrics to assess the accuracy of a regression model. Most of these measure in some way the average error that the model makes. The metric that we will be most interested in is the root-mean-square error.

While for the sake of comparing models, the choice between RMSE and MSE is arbitrary, we have a preference for RMSE, as it has the same units as the response variable. Also, notice that in the prediction context MSE refers to an average, whereas in an ANOVA (summary(lm(...))) context, the denominator for MSE may not be

For a linear model , the estimate of

We can write an R function that will be useful for performing this calculation.

rmse = function(actual, predicted) {

sqrt(mean((actual - predicted) ^ 2))

}Model Complexity

Aside from how well a model predicts, we will also be very interested in the complexity (flexibility) of a model. For now, we will only consider nested linear models for simplicity. What is a “nested model”? When you have “nested models”, then one model contains all of the same terms that the other model has. If we have TV, Radio, Newspaper, then we would have a “nested model” in this case:

model1 = lm(sales ~ TV + radio, data = Advertising)

model2 = lm(sales ~ TV + radio + newspaper, data = Advertising)Here, model1 is nested in model2. Here are non-nested models:

model1 = lm(sales ~ TV + radio, data = Advertising)

model2 = lm(sales ~ TV + newspaper, data = Advertising)When we add polynomial terms, we always add the lower-order terms as well. This will always make a nested model:

model1 = lm(sales ~ TV + radio, data = Advertising)

model2 = lm(sales ~ TV + TV^2 + radio + radio^2, data = Advertising)

model3 = lm(sales ~ TV + TV^2 + radio + radio^2 + TV:radio, data = Advertising)Those are nested models – 1 and 2 use a subset of terms from 3, and 1 uses a subset of 2.

When models are nested, then the more predictors that a model has, the more complex the model. For the sake of assigning a numerical value to the complexity of a linear model, we will use the number of predictors,

We write a simple R function to extract this information from a model.

get_complexity = function(model) {

length(coef(model)) - 1

}Test-Train Split

There is an issue with fitting a model to all available data then using RMSE to determine how well the model predicts. It is essentially cheating! As a linear model becomes more complex, the RSS, thus RMSE, can never go up. It will only go down, or in very specific cases, stay the same.

This would suggest that to predict well, we should use the largest possible model! However, in reality we have hard fit to a specific dataset, but as soon as we see new data, a large model may in fact predict poorly. This is called overfitting.

Overfitting in action

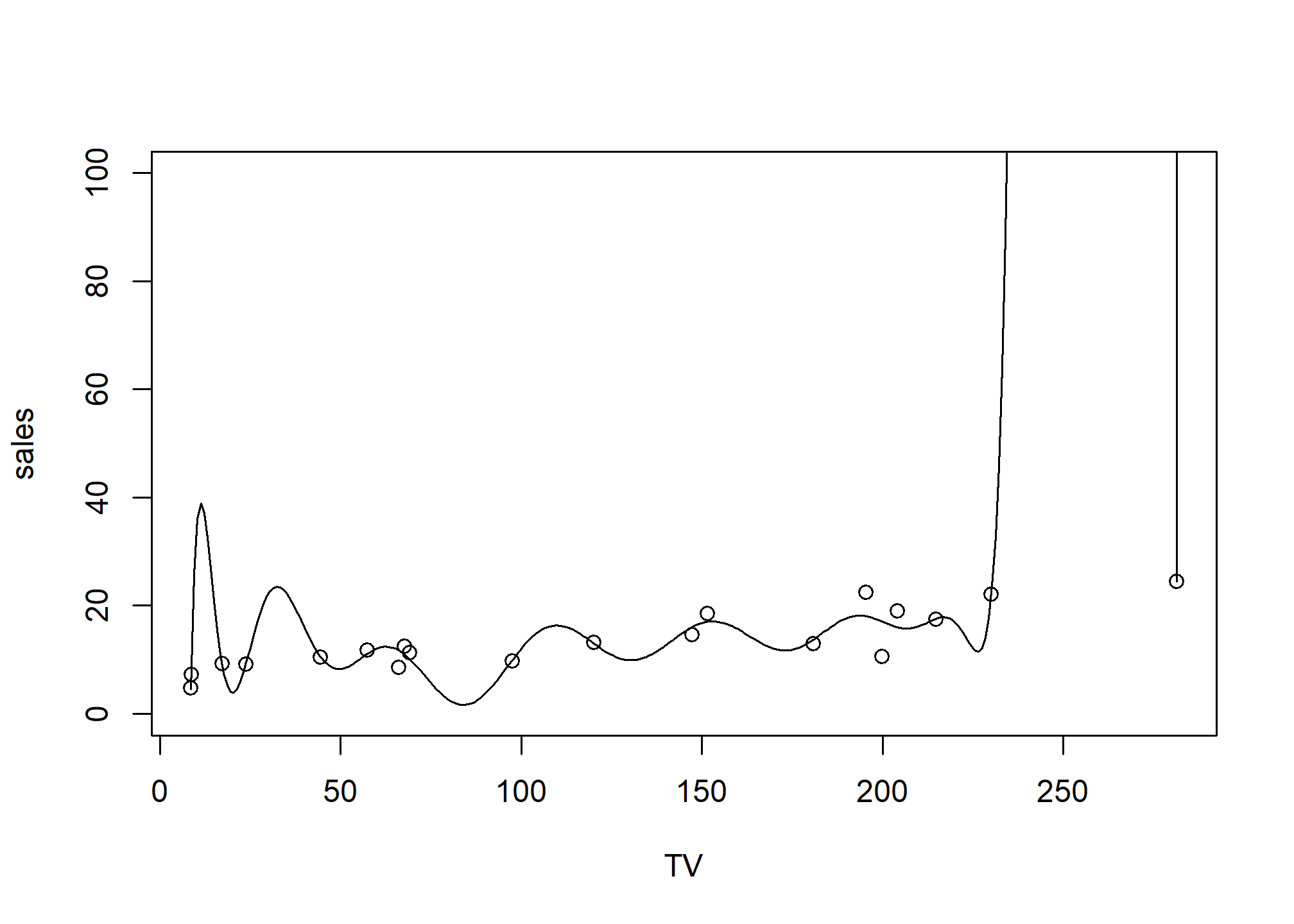

Let’s take a quick look at why overfitting may harm us, despite the notion that we want to minimize RMSE. I’m going to take a 20-row subset of Advertising and fit a 16th-degree polynomial. If you remember your mathematics training, an Nth degree polynomial has N-1 “inflection points”, which translates to fitting a curve with 15 inflections. That’s pretty flexible! R is smart and won’t let us fit a 20-degree polynomial, though. Here’s as close as we can get:

smallset = Advertising %>% slice(1:20)

flexible.lm = lm(sales ~ poly(TV, 16), smallset)

summary(flexible.lm)##

## Call:

## lm(formula = sales ~ poly(TV, 16), data = smallset)

##

## Residuals:

## 1 2 3 4 5 6 7

## 6.804e-03 -1.362e-01 -8.586e-02 1.154e+00 -7.661e-01 9.626e-01 5.371e-01

## 8 9 10 11 12 13 14

## 1.509e-01 -9.328e-01 -7.052e+00 -1.591e+00 -2.264e-01 8.249e-02 -1.121e-01

## 15 16 17 18 19 20

## 3.317e+00 4.554e+00 7.361e-02 -1.741e-06 1.143e+00 -1.078e+00

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 13.4950 1.2288 10.982 0.00162 **

## poly(TV, 16)1 20.4353 5.4954 3.719 0.03384 *

## poly(TV, 16)2 1.6908 5.4954 0.308 0.77845

## poly(TV, 16)3 2.4704 5.4954 0.450 0.68354

## poly(TV, 16)4 -1.2439 5.4954 -0.226 0.83547

## poly(TV, 16)5 0.9185 5.4954 0.167 0.87789

## poly(TV, 16)6 -3.9055 5.4954 -0.711 0.52854

## poly(TV, 16)7 -2.2700 5.4954 -0.413 0.70730

## poly(TV, 16)8 0.2120 5.4954 0.039 0.97165

## poly(TV, 16)9 1.4021 5.4954 0.255 0.81510

## poly(TV, 16)10 -2.3341 5.4954 -0.425 0.69965

## poly(TV, 16)11 -1.1539 5.4954 -0.210 0.84713

## poly(TV, 16)12 -0.6833 5.4954 -0.124 0.90891

## poly(TV, 16)13 1.2426 5.4954 0.226 0.83564

## poly(TV, 16)14 1.5138 5.4954 0.275 0.80084

## poly(TV, 16)15 2.0444 5.4954 0.372 0.73461

## poly(TV, 16)16 -1.8923 5.4954 -0.344 0.75331

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 5.495 on 3 degrees of freedom

## Multiple R-squared: 0.8385, Adjusted R-squared: -0.02313

## F-statistic: 0.9732 on 16 and 3 DF, p-value: 0.5933Looks great - we are explaining a lot of the variation in sales! Let’s plot this 16-degree polynomial:

# Plot a curve using predict over a very fine set of values

plotseq = seq(from = min(smallset$TV), to = max(smallset$TV), length = 300)

predseq = predict(flexible.lm, newdata = data.frame(TV = plotseq))

plot(sales ~ TV, smallset, ylim = c(0, 100))

lines(y = predseq, x = plotseq)

and this model has complexity of:

get_complexity(flexible.lm)## [1] 16But we don’t really believe that TV advertising of around 20 would result in almost 40 in sales. We certainly don’t trust that huge spike at 250, either! But how do we stop ourselves from overfitting?

Avoiding overfitting

Frequently we will take a dataset of interest and split it in two. One part of the datasets will be used to fit (train) a model, which we will call the training data. The remainder of the original data will be used to assess how well the model is predicting, which we will call the test data. Test data should never be used to train a model.

Note that sometimes the terms evaluation set and test set are used interchangeably. We will give somewhat specific definitions to these later. For now we will simply use a single test set for a training set.

Here we use the sample() function to obtain a random sample of the rows of the original data. We then use those row numbers (and remaining row numbers) to split the data accordingly. Notice we used the set.seed() function to allow use to reproduce the same random split each time we perform this analysis.

set.seed(90)

num_obs = nrow(Advertising)

train_index = sample(num_obs, size = trunc(0.50 * num_obs))

train_data = Advertising[train_index, ]

test_data = Advertising[-train_index, ]We will look at two measures that assess how well a model is predicting, the train RMSE and the test RMSE.

Here

Here

We will start with the simplest possible linear model, that is, a model with no predictors.

fit_0 = lm(sales ~ 1, data = train_data)

get_complexity(fit_0)## [1] 0# train RMSE

sqrt(mean((train_data$sales - predict(fit_0, train_data)) ^ 2))## [1] 5.224106# test RMSE

sqrt(mean((test_data$sales - predict(fit_0, test_data)) ^ 2))## [1] 5.211881The previous two operations obtain the train and test RMSE. Since these are operations we are about to use repeatedly, we should use the function that we happen to have already written.

# train RMSE

rmse(actual = train_data$sales, predicted = predict(fit_0, train_data))## [1] 5.224106# test RMSE

rmse(actual = test_data$sales, predicted = predict(fit_0, test_data))## [1] 5.211881This function can actually be improved for the inputs that we are using. We would like to obtain train and test RMSE for a fitted model, given a train or test dataset, and the appropriate response variable.

get_rmse = function(model, data, response) {

rmse(actual = subset(data, select = response, drop = TRUE),

predicted = predict(model, data))

}By using this function, our code becomes easier to read, and it is more obvious what task we are accomplishing.

get_rmse(model = fit_0, data = train_data, response = "sales") # train RMSE## [1] 5.224106get_rmse(model = fit_0, data = test_data, response = "sales") # test RMSE## [1] 5.211881Adding Flexibility to Linear Models

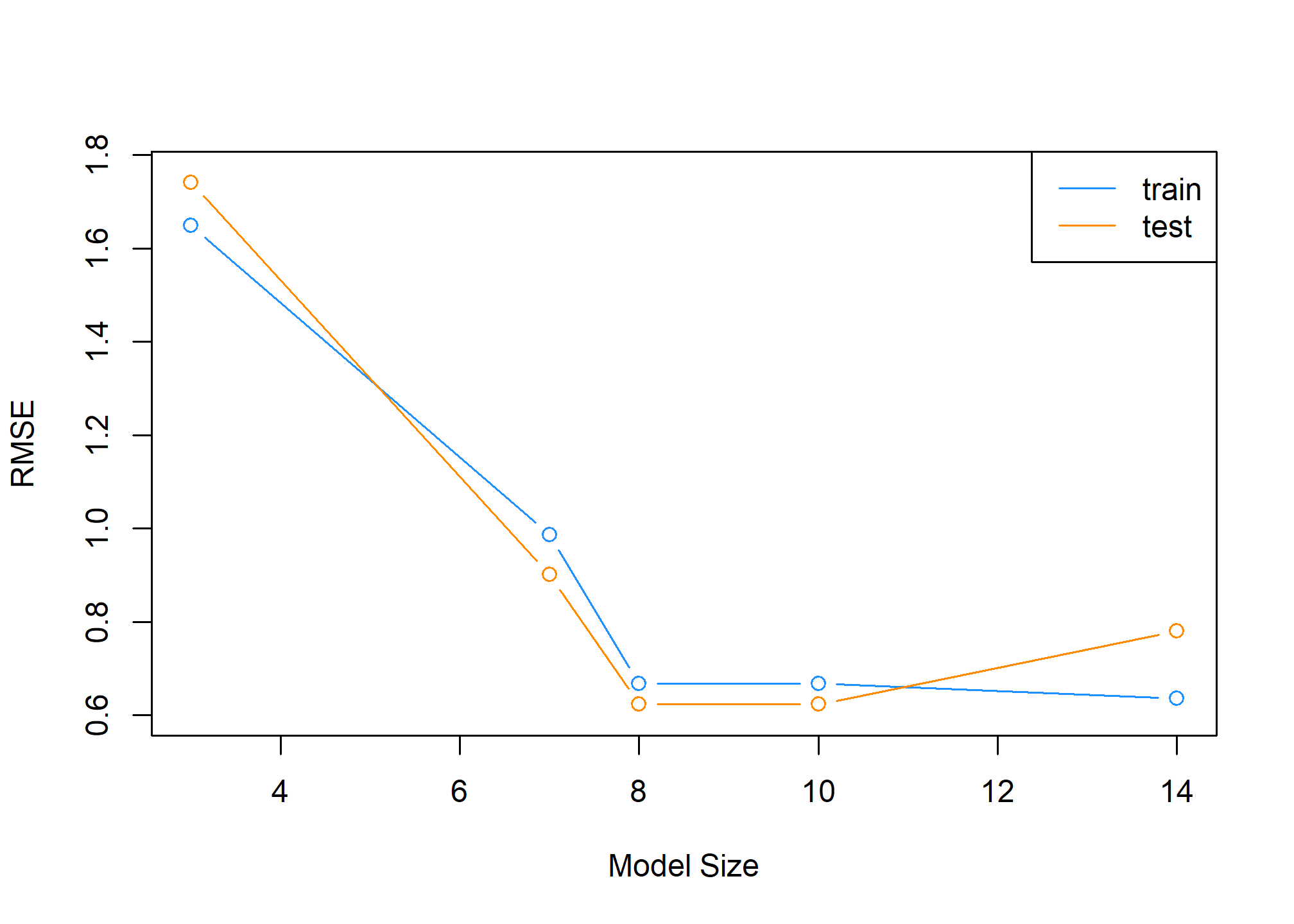

Each successive model we fit will be more and more flexible using both interactions and polynomial terms. We will see the training error decrease each time the model is made more flexible. We expect the test error to decrease a number of times, then eventually start going up, as a result of overfitting.

fit_1 = lm(sales ~ ., data = train_data)

get_complexity(fit_1)## [1] 3get_rmse(model = fit_1, data = train_data, response = "sales") # train RMSE## [1] 1.649346get_rmse(model = fit_1, data = test_data, response = "sales") # test RMSE## [1] 1.741642fit_2 = lm(sales ~ radio * newspaper * TV, data = train_data)

get_complexity(fit_2)## [1] 7get_rmse(model = fit_2, data = train_data, response = "sales") # train RMSE## [1] 0.9875248get_rmse(model = fit_2, data = test_data, response = "sales") # test RMSE## [1] 0.9014459fit_3 = lm(sales ~ radio * newspaper * TV + I(TV ^ 2), data = train_data)

get_complexity(fit_3)## [1] 8get_rmse(model = fit_3, data = train_data, response = "sales") # train RMSE## [1] 0.6685005get_rmse(model = fit_3, data = test_data, response = "sales") # test RMSE## [1] 0.6242491fit_4 = lm(sales ~ radio * newspaper * TV +

I(TV ^ 2) + I(radio ^ 2) + I(newspaper ^ 2), data = train_data)

get_complexity(fit_4)## [1] 10get_rmse(model = fit_4, data = train_data, response = "sales") # train RMSE## [1] 0.6676294get_rmse(model = fit_4, data = test_data, response = "sales") # test RMSE## [1] 0.6232819fit_5 = lm(sales ~ radio * newspaper * TV +

I(TV ^ 2) * I(radio ^ 2) * I(newspaper ^ 2), data = train_data)

get_complexity(fit_5)## [1] 14get_rmse(model = fit_5, data = train_data, response = "sales") # train RMSE## [1] 0.6359041get_rmse(model = fit_5, data = test_data, response = "sales") # test RMSE## [1] 0.7809674Choosing a Model

To better understand the relationship between train RMSE, test RMSE, and model complexity, we summarize our results, as the above is somewhat cluttered.

First, we recap the models that we have fit.

fit_1 = lm(sales ~ ., data = train_data)

fit_2 = lm(sales ~ radio * newspaper * TV, data = train_data)

fit_3 = lm(sales ~ radio * newspaper * TV + I(TV ^ 2), data = train_data)

fit_4 = lm(sales ~ radio * newspaper * TV +

I(TV ^ 2) + I(radio ^ 2) + I(newspaper ^ 2), data = train_data)

fit_5 = lm(sales ~ radio * newspaper * TV +

I(TV ^ 2) * I(radio ^ 2) * I(newspaper ^ 2), data = train_data)Next, we create a list of the models fit.

model_list = list(fit_1, fit_2, fit_3, fit_4, fit_5)We then obtain train RMSE, test RMSE, and model complexity for each.

train_rmse = sapply(model_list, get_rmse, data = train_data, response = "sales")

test_rmse = sapply(model_list, get_rmse, data = test_data, response = "sales")

model_complexity = sapply(model_list, get_complexity)We then plot the results. The train RMSE can be seen in blue, while the test RMSE is given in orange.

plot(model_complexity, train_rmse, type = "b",

ylim = c(min(c(train_rmse, test_rmse)) - 0.02,

max(c(train_rmse, test_rmse)) + 0.02),

col = "dodgerblue",

xlab = "Model Size",

ylab = "RMSE")

lines(model_complexity, test_rmse, type = "b", col = "darkorange")

legend('topright', legend = c('train','test'), col = c('dodgerblue','darkorange'), lty=1)

We also summarize the results as a table. fit_1 is the least flexible, and fit_5 is the most flexible. We see the Train RMSE decrease as flexibility increases. We see that the Test RMSE is smallest for fit_3, thus is the model we believe will perform the best on future data not used to train the model. Note this may not be the best model, but it is the best model of the models we have seen in this example.

| Model | Train RMSE | Test RMSE | Predictors |

|---|---|---|---|

fit_1 |

1.6493463 | 1.7416416 | 3 |

fit_2 |

0.9875248 | 0.9014459 | 7 |

fit_3 |

0.6685005 | 0.6242491 | 8 |

fit_4 |

0.6676294 | 0.6232819 | 10 |

fit_5 |

0.6359041 | 0.7809674 | 14 |

To summarize:

- Underfitting models: In general High Train RMSE, High Test RMSE. Seen in

fit_1andfit_2. - Overfitting models: In general Low Train RMSE, High Test RMSE. Seen in

fit_4andfit_5.

Specifically, we say that a model is overfitting if there exists a less complex model with lower Test RMSE. Then a model is underfitting if there exists a more complex model with lower Test RMSE.

A number of notes on these results:

- The labels of under and overfitting are relative to the best model we see,

fit_3. Any model more complex with higher Test RMSE is overfitting. Any model less complex with higher Test RMSE is underfitting. - The train RMSE is guaranteed to follow this non-increasing pattern. The same is not true of test RMSE. Here we see a nice U-shaped curve. There are theoretical reasons why we should expect this, but that is on average. Because of the randomness of one test-train split, we may not always see this result. Re-perform this analysis with a different seed value and the pattern may not hold. We will discuss why we expect this next chapter. We will discuss how we can help create this U-shape much later.

- Often we expect train RMSE to be lower than test RMSE. Again, due to the randomness of the split, you may get lucky and this will not be true.

A final note on the analysis performed here; we paid no attention whatsoever to the “assumptions” of a linear model. We only sought a model that predicted well, and paid no attention to a model for explaination. Hypothesis testing did not play a role in deciding the model, only prediction accuracy. Collinearity? We don’t care. Assumptions? Still don’t care. Diagnostics? Never heard of them. (These statements are a little over the top, and not completely true, but just to drive home the point that we only care about prediction. Often we latch onto methods that we have seen before, even when they are not needed.)