Probability and Statistics

- Required Reading

- Groups

- Yesterday’s Lab (Lab 03)

- Discrete probability

- Relative frequency

- Notation

- Probability distributions

- Monte Carlo simulations for categorical data

- Independence

- Conditional probabilities

- Addition and multiplication rules

- Combinations and permutations

- Examples

- Infinity in practice

- Theoretical continuous distributions

- Monte Carlo simulations for continuous variables

- Continuous distributions

- Random variables

Groups

Everyone has been assigned to a group. If you did not get a group assignment yesterday, let me know right now.

Yesterday’s Lab (Lab 03)

Let’s go over a few helpful things for future labs. First off, we can load a .csv straight from the web (though it is very good practice to download the file and use it locally if you’re not sure the file will exist in the future). Reproducibility of research is a big deal!

unemployment = read.csv('https://ssc442kirkpatrick.netlify.app/projects/03-lab/data/unemployment.csv')

str(unemployment)
## 'data.frame': 6732 obs. of 8 variables:

## $ year : int 2016 2016 2016 2016 2016 2016 2016 2016 2016 2016 ...

## $ state : chr "Alabama" "Alabama" "Alabama" "Alabama" ...

## $ month : int 12 11 10 9 8 7 6 5 4 3 ...

## $ month_name : chr "December" "November" "October" "September" ...

## $ unemployment: num 5.7 5.8 5.9 5.9 5.8 5.8 5.8 5.8 5.9 5.9 ...

## $ date : chr "2016-12-01" "2016-11-01" "2016-10-01" "2016-09-01" ...

## $ region : chr "South" "South" "South" "South" ...

## $ division : chr "East South Central" "East South Central" "East South Central" "East South Central" ...

Note that I used read.csv and not read_csv, which is part of the tidyverse (the readr package). read_csv tries to be helpful and guesses a type for each column: it will find your date column and turn it into the Date format, which we aren’t working with yet.

library(tidyverse)

str(read_csv('https://ssc442kirkpatrick.netlify.app/projects/03-lab/data/unemployment.csv'))
## spc_tbl_ [6,732 × 8] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## $ year : num [1:6732] 2016 2016 2016 2016 2016 ...

## $ state : chr [1:6732] "Alabama" "Alabama" "Alabama" "Alabama" ...

## $ month : num [1:6732] 12 11 10 9 8 7 6 5 4 3 ...

## $ month_name : chr [1:6732] "December" "November" "October" "September" ...

## $ unemployment: num [1:6732] 5.7 5.8 5.9 5.9 5.8 5.8 5.8 5.8 5.9 5.9 ...

## $ date : Date[1:6732], format: "2016-12-01" "2016-11-01" ...

## $ region : chr [1:6732] "South" "South" "South" "South" ...

## $ division : chr [1:6732] "East South Central" "East South Central" "East South Central" "East South Central" ...

## - attr(*, "spec")=

## .. cols(

## .. year = col_double(),

## .. state = col_character(),

## .. month = col_double(),

## .. month_name = col_character(),

## .. unemployment = col_double(),

## .. date = col_date(format = ""),

## .. region = col_character(),

## .. division = col_character()

## .. )

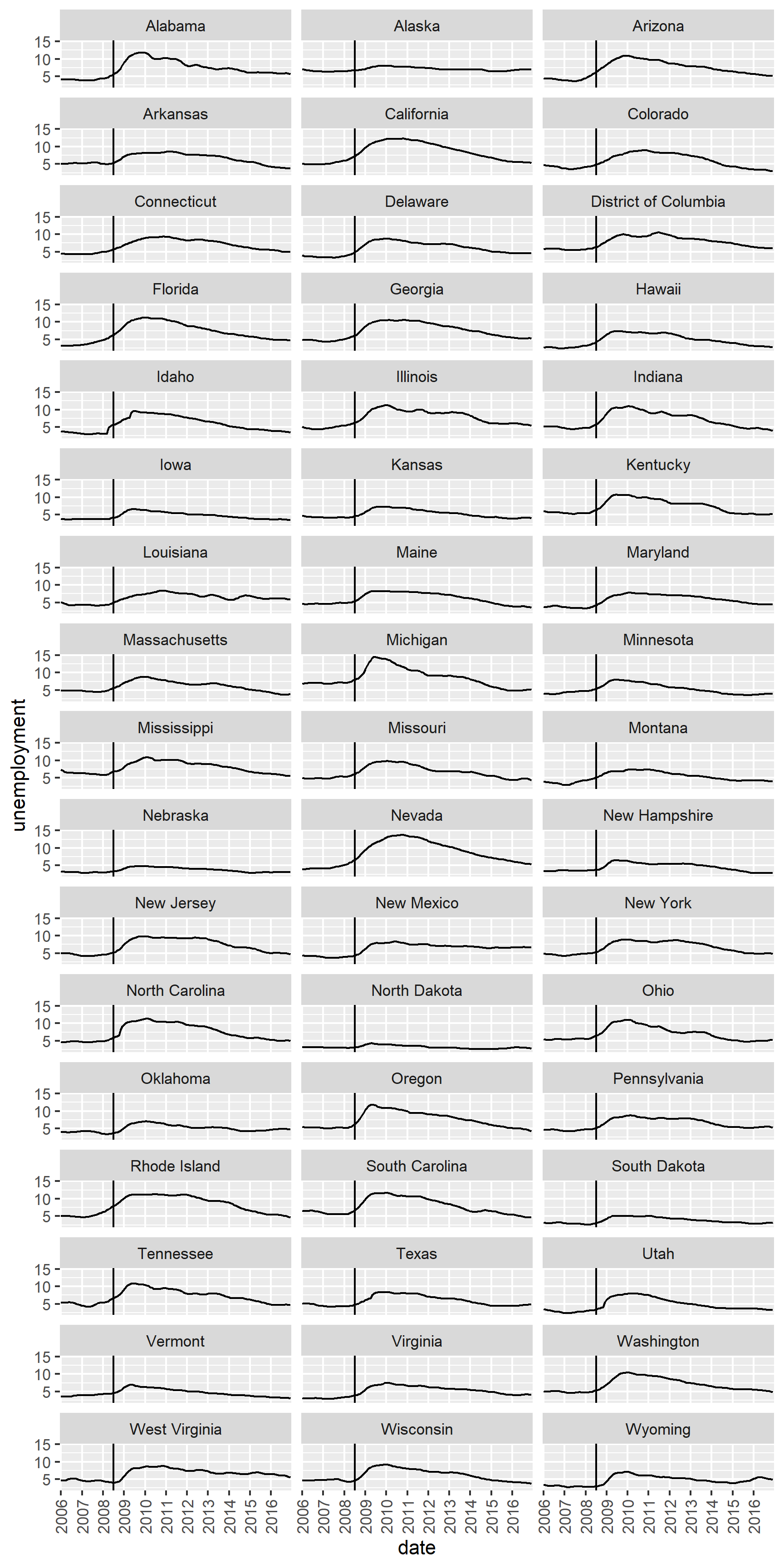

## - attr(*, "problems")=<externalptr>A large part of the challenge of Exercise 1 was getting all of the important elements legible. A few tips to make this happen, drawn from previous lectures:

- facet_wrap lets you choose the number of columns. There’s no reason we need to keep the default number of columns. Fewer columns = more room for the x-axis.

- We know how to set the breaks and labels for the x-axis using scale_x_discrete().

- If each plot has a common x-axis, then facet_wrap will only put the axis labels on the bottom row. If you used scales = 'free_x', then you’ll have a lot of extra x-axis labels.

- Set out.width to ‘95%’ (or increase fig.width) and let the height be determined by the plot.

p = ggplot(unemployment, aes(x = date, y = unemployment, group = state)) +

geom_line() +

facet_wrap(vars(state), ncol = 3) +

scale_x_discrete(breaks = paste0(2006:2016, '-01-01'),

labels = 2006:2016) +

theme(axis.text.x = element_text(angle = 90, vjust = .5, hjust = 1)) +

geom_vline(xintercept = '2008-07-01')

p

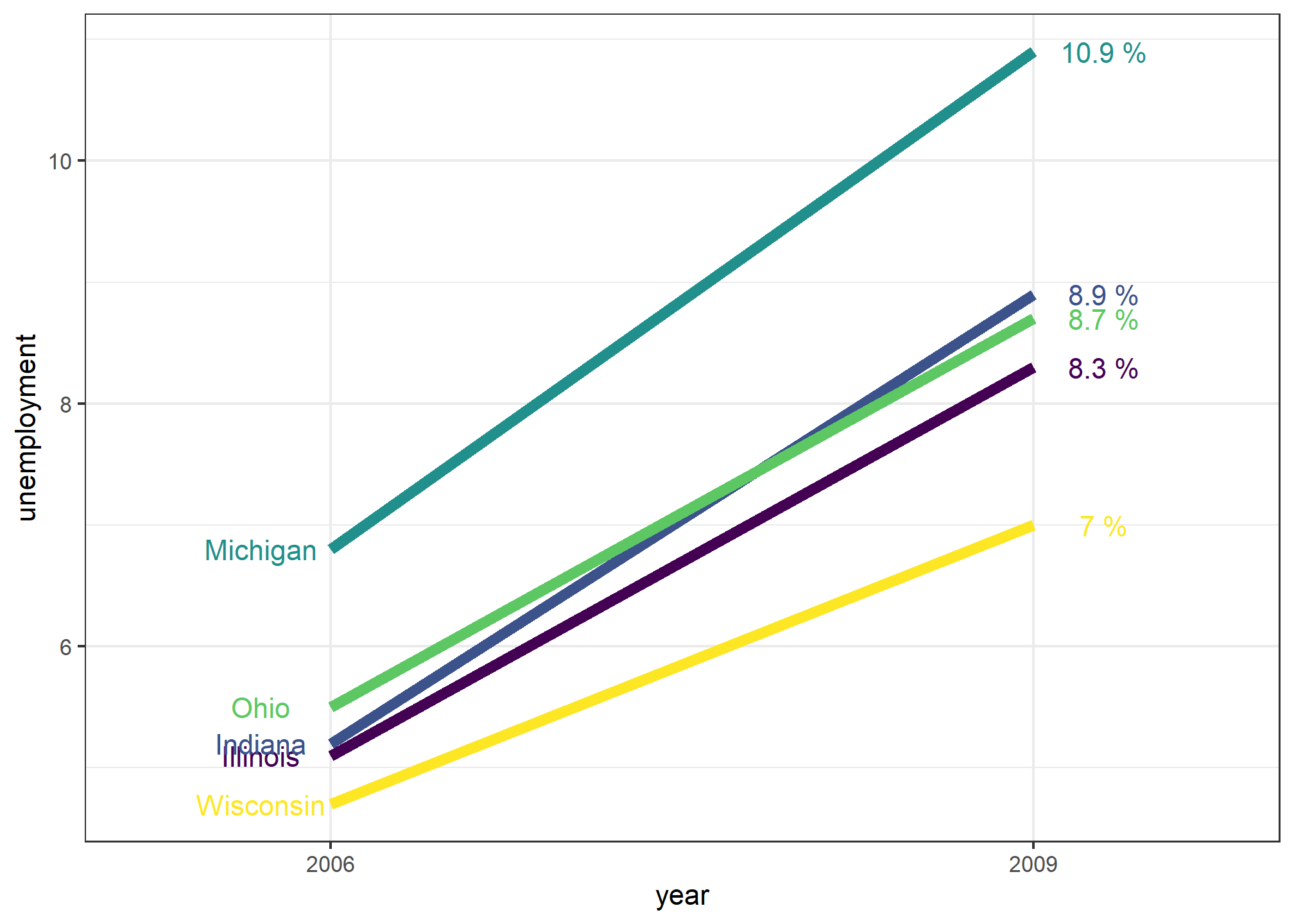

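For Exercise 2, many of you ran into an issue where the x-axis limits went far beyond the two years we wanted to use (2006, 2009). I’m still not certain exactly where this issue came from, but we can always set the “window” for the plot using coord_cartesian(xlim = ...). In the code below, year is converted to a factor, so 2006 and 2009 sit at x positions 1 and 2, and xlim is given in those units; ggplot’s default padding around a discrete axis keeps both years in view.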

unemployment %>%

dplyr::filter(year %in% c(2006, 2009) & division %in% c('East North Central')) %>%

dplyr::filter(month==1) %>%

dplyr::mutate(year = as.factor(year)) %>%

ggplot(aes(x = year, y = unemployment, group = state, col = state)) +

geom_line(linewidth = 2) +

geom_text(data = unemployment %>% filter(year == 2006 & division %in% c('East North Central') & month==1),

aes(x = '2006', y = unemployment, label = state),

nudge_x = -.10) +

geom_text(data = unemployment %>% filter(year == 2009 & division %in% c('East North Central') & month==1),

aes(x = '2009', y = unemployment, label = paste(unemployment, '%')),

nudge_x = .10) +

scale_color_viridis_d(guide = 'none', option = 'D') +

theme_bw() +

coord_cartesian(xlim = c(1.25,1.75))

Discrete probability

We start by covering some basic principles related to categorical data. This subset of probability is referred to as discrete probability. It will help us understand the probability theory we will later introduce for numeric and continuous data, which is much more common in data science applications. Discrete probability is particularly useful in card games, and therefore we use these as examples.

Relative frequency

The word probability is used in everyday language. Answering questions about probability is often hard, if not impossible. Here we discuss a mathematical definition of probability that does permit us to give precise answers to certain questions.

For example, if I have 2 red beads and 3 blue beads inside an urn1 (most probability books use this archaic term, so we do too) and I pick one at random, what is the probability of picking a red one? Our intuition tells us that the answer is 2/5 or 40%. A precise definition can be given by noting that there are five possible outcomes of which two satisfy the condition necessary for the event “pick a red bead”. Since each of the five outcomes has the same chance of occurring, we conclude that the probability is .4 for red and .6 for blue.

A more tangible way to think about the probability of an event is as the proportion of times the event occurs when we repeat the experiment an infinite number of times, independently, and under the same conditions.
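The long-run definition can be sketched in R by tracking the running proportion of red as draws accumulate. This is an illustrative sketch: it assumes the 2-red/3-blue urn above, uses R’s sample function (covered later in this lecture) to draw with replacement, and the seed and the number of draws (100,000) are arbitrary choices.

```r
# Urn from the example: 2 red and 3 blue beads
urn <- c("red", "red", "blue", "blue", "blue")

set.seed(2018)  # arbitrary seed, only for reproducibility
# Independent draws under the same conditions (the bead goes back each time)
draws <- sample(urn, 100000, replace = TRUE)

# Proportion of red after 1, 2, ..., 100000 draws
running_red <- cumsum(draws == "red") / seq_along(draws)

# Early values bounce around; later values settle near 2/5 = 0.4
running_red[c(10, 100, 1000, 10000, 100000)]
```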

Notation

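We use the notation $\Pr(A)$ to denote the probability of event $A$ happening. For example, if $A$ is the event “pick a red bead” from the urn above, then $\Pr(A) = 0.4$.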

In data science applications, we will often deal with continuous variables. These events will often be things like “is this person taller than 6 feet”. In this case, we write events in a more mathematical form: $X \geq 6$, where $X$ is the person’s height in feet.

Probability distributions

If we know the relative frequency of the different categories, defining a distribution for categorical outcomes is relatively straightforward. We simply assign a probability to each category. In cases that can be thought of as beads in an urn, for each bead type, their proportion defines the distribution.

If we are randomly calling likely voters from a population that is 44% Democrat, 44% Republican, 10% undecided, and 2% Green Party, these proportions define the probability for each group. The probability distribution is:

| Event | Probability |
|---|---|
| Pr(picking a Republican) | 0.44 |
| Pr(picking a Democrat) | 0.44 |
| Pr(picking an undecided) | 0.10 |
| Pr(picking a Green) | 0.02 |
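A distribution like this one can be passed to sample through its prob argument, which draws each category with the given probability. This is a minimal sketch that assumes 100,000 simulated calls and an arbitrary seed. The observed proportions should land close to the table.

```r
# Probability distribution from the table above
parties <- c("Republican", "Democrat", "Undecided", "Green")
p <- c(0.44, 0.44, 0.10, 0.02)
names(p) <- parties

stopifnot(isTRUE(all.equal(sum(p), 1)))  # a distribution must sum to 1

set.seed(2020)  # arbitrary seed
calls <- sample(parties, 100000, replace = TRUE, prob = p)

# Observed proportions, reordered to match the table
est <- prop.table(table(calls))[parties]
round(est, 3)
```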

Monte Carlo simulations for categorical data

Computers provide a way to actually perform the simple random experiment described above: pick a bead at random from a bag that contains three blue beads and two red ones. Random number generators permit us to mimic the process of picking at random.

An example is the sample function in R. We demonstrate its use in the code below. First, we use the function rep to generate the urn:

beads <- rep(c("red", "blue"), times = c(2,3))

beads
## [1] "red" "red" "blue" "blue" "blue"

and then use sample to pick a bead at random:

sample(beads, 1)
## [1] "blue"

This line of code produces one random outcome. We want to repeat this experiment an infinite number of times, but it is impossible to repeat forever. Instead, we repeat the experiment a large enough number of times to make the results practically equivalent to repeating forever. This is an example of a Monte Carlo simulation.

Much of what mathematical and theoretical statisticians study, which we do not cover in this class, relates to providing rigorous definitions of “practically equivalent” as well as studying how close a large number of experiments gets us to what happens in the limit. Later in this lecture, we provide a practical approach to deciding what is “large enough”.

To perform our first Monte Carlo simulation, we use the replicate function, which permits us to repeat the same task any number of times. Here, we repeat the random event $B = 10,000$ times:

B <- 10000

events <- replicate(B, sample(beads, 1))

We can now see whether our definition agrees with this Monte Carlo simulation approximation. We can use table to see the distribution:

tab <- table(events)

tab
## events

## blue red

## 6068 3932

and prop.table gives us the proportions:

prop.table(tab)
## events

## blue red

## 0.6068 0.3932

The numbers above are the estimated probabilities provided by this Monte Carlo simulation. Statistical theory, not covered here, tells us that as $B$ gets larger, the estimates get closer to 3/5 = .6 and 2/5 = .4.

Although this is a simple and not very useful example, we will use Monte Carlo simulations to estimate probabilities in cases in which it is harder to compute the exact ones. Before delving into more complex examples, we use simple ones to demonstrate the computing tools available in R.

Setting the random seed

Before we continue, we will briefly explain the following important line of code:

set.seed(1986)

Throughout this class, we use random number generators. This implies that many of the results presented can actually change by chance, which means that a frozen version of the class may show a different result than what you obtain when you try the code shown in the class. This is actually fine, since the results are random and change from time to time. However, if you want to ensure that results are exactly the same every time you run them, you can set R’s random number generation seed to a specific number. Above we set it to 1986. We want to avoid using the same seed every time. A popular way to pick the seed is the year - month - day. For example, we picked 1986 on December 20, 2018: 2018 - 12 - 20 = 1986.

You can learn more about setting the seed by looking at the documentation:

?set.seed

In the exercises, we may ask you to set the seed to ensure that the results you obtain are exactly what we expect them to be.
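A quick way to see what the seed does is to reset it before each of two identical calls. The seed value and the sample of 5 numbers out of 1 to 100 below are arbitrary illustrations. The same seed reproduces exactly the same draws.

```r
# Same seed -> same "random" draws
set.seed(1986)
first_try <- sample(1:100, 5)

set.seed(1986)
second_try <- sample(1:100, 5)

identical(first_try, second_try)  # TRUE

# Date-based seed described above: year - month - day
2018 - 12 - 20  # 1986
```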

With and without replacement

The function sample has an argument that permits us to pick more than one element from the urn. However, by default, this selection occurs without replacement: after a bead is selected, it is not put back in the bag. Notice what happens when we ask to randomly select five beads:

sample(beads, 5)
## [1] "red" "blue" "blue" "blue" "red"

sample(beads, 5)
## [1] "red" "red" "blue" "blue" "blue"

sample(beads, 5)
## [1] "blue" "red" "blue" "red" "blue"

This results in rearrangements that always have three blue and two red beads. If we ask that six beads be selected, we get an error:

sample(beads, 6)

Error in sample.int(length(x), size, replace, prob) : cannot take a sample larger than the population when 'replace = FALSE'

However, the sample function can be used directly, without the use of replicate, to repeat the same experiment of picking 1 out of the 5 beads, continually, under the same conditions. To do this, we sample with replacement: return the bead back to the urn after selecting it.

We can tell sample to do this by changing the replace argument, which defaults to FALSE, to replace = TRUE:

events <- sample(beads, B, replace = TRUE)

prop.table(table(events))
## events

## blue red

## 0.6017 0.3983

Not surprisingly, we get results very similar to those previously obtained with replicate.
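The difference between the two modes shows up clearly if we count the red beads in each five-bead draw. This small sketch uses an arbitrary seed and 1,000 repetitions. Without replacement every draw empties the urn, so the count is always 2. With replacement it changes from draw to draw.

```r
beads <- rep(c("red", "blue"), times = c(2, 3))

set.seed(1)  # arbitrary seed
# Number of red beads in 5 draws, repeated 1,000 times
reds_without <- replicate(1000, sum(sample(beads, 5) == "red"))
reds_with    <- replicate(1000, sum(sample(beads, 5, replace = TRUE) == "red"))

table(reds_without)  # always 2: each draw uses up the whole urn
table(reds_with)     # can range from 0 to 5
```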

Independence

We say two events are independent if the outcome of one does not affect the other. The classic example is coin tosses. Every time we toss a fair coin, the probability of seeing heads is 1/2 regardless of what previous tosses have revealed. The same is true when we pick beads from an urn with replacement. In the example above, the probability of red is 0.40 regardless of previous draws.

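Many examples of events that are not independent come from card games. When we deal the first card, the probability of getting a King is 1/13 since there are thirteen possibilities: Ace, Deuce, Three, …, Ten, Jack, Queen, and King. Now, if we deal a King for the first card and don’t put it back into the deck, the probability of the second card being a King is smaller, because there are only three Kings left among the remaining 51 cards: the probability is 3/51. These events are therefore not independent: the first outcome affected the next one.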

To see an extreme case of non-independent events, consider our example of drawing five beads at random without replacement:

x <- sample(beads, 5)

If you have to guess the color of the first bead, you will predict blue since blue has a 60% chance. But if I show you the result of the last four outcomes:

x[2:5]
## [1] "blue" "blue" "blue" "red"

would you still guess blue? Of course not. Now you know that the probability of red is 1, since the only bead left is red. The events are not independent, so the probabilities change.
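Independence can also be checked by simulation. The sketch below uses an arbitrary seed and 100,000 two-bead experiments. It estimates the probability that the second bead is red given that the first one was red. With replacement the answer should stay near 2/5. Without replacement it should drop to about 1/4, because only one of the four remaining beads is red.

```r
beads <- rep(c("red", "blue"), times = c(2, 3))
B <- 100000
set.seed(3)  # arbitrary seed

# Each column of these 2 x B matrices is one experiment of two draws
with_repl    <- replicate(B, sample(beads, 2, replace = TRUE))
without_repl <- replicate(B, sample(beads, 2))

# Estimated Pr(second is red | first is red)
cond_red <- function(draws) {
  first_red <- draws[1, ] == "red"
  mean(draws[2, first_red] == "red")
}

cond_red(with_repl)     # close to 2/5: the first draw does not matter
cond_red(without_repl)  # close to 1/4: one red bead is already gone
```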

Conditional probabilities

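When events are not independent, conditional probabilities are useful. We already saw an example of a conditional probability: we computed the probability that a second dealt card is a King given that the first was a King. In probability, we use the following notation:

$$\Pr(\text{Card 2 is a King} \mid \text{Card 1 is a King}) = 3/51$$

We use the $\mid$ as shorthand for “given that” or “conditional on”.

When two events, say $A$ and $B$, are independent, we have:

$$\Pr(A \mid B) = \Pr(A)$$

This is the mathematical way of saying: the fact that $B$ happened does not affect the probability of $A$ happening.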

Addition and multiplication rules

Multiplication rule

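If we want to know the probability of two events, say $A$ and $B$, occurring, we can use the multiplication rule:

$$\Pr(A \text{ and } B) = \Pr(A)\Pr(B \mid A)$$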

So, in a Blackjack game, to calculate the chances of getting a 21 by drawing an Ace and then a face card, we compute the probability of the first being an Ace and multiply by the probability of drawing a face card or a 10 given that the first was an Ace:

$$\frac{1}{13} \times \frac{16}{51} \approx 0.024$$
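The arithmetic can be checked directly in R. The counts (4 Aces, and 16 face cards and 10s) come from a standard 52-card deck.

```r
# Ace first: 4 Aces among 52 cards
p_ace <- 4 / 52
# Then a face card or a 10 (16 such cards) among the 51 cards that remain
p_face_given_ace <- 16 / 51

# Multiplication rule: Pr(A and B) = Pr(A) * Pr(B | A)
p_ace_then_face <- p_ace * p_face_given_ace
p_ace_then_face  # 16/663, about 0.024
```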

The multiplication rule also applies to more than two events. We can use induction to expand for more events:

$$\Pr(A \text{ and } B \text{ and } C) = \Pr(A)\Pr(B \mid A)\Pr(C \mid A \text{ and } B)$$

Multiplication rule under independence

When we have independent events, the multiplication rule becomes simpler:

$$\Pr(A \text{ and } B \text{ and } C) = \Pr(A)\Pr(B)\Pr(C)$$

But we have to be very careful before using this since assuming independence can result in very different and incorrect probability calculations when we don’t actually have independence.

As an example, imagine a court case in which the suspect was described as having a mustache and a beard. The defendant has a mustache and a beard, and the prosecution brings in an “expert” to testify that 1/10 men have beards and 1/5 have mustaches, so using the multiplication rule we conclude that only $1/10 \times 1/5$, or 0.02, have both.

But to multiply like this we need to assume independence! Say the conditional probability of a man having a mustache given that he has a beard is .95. Then the correctly calculated probability is much higher: $1/10 \times 95/100 = 0.095$.

The multiplication rule also gives us a general formula for computing conditional probabilities:

$$\Pr(B \mid A) = \frac{\Pr(A \text{ and } B)}{\Pr(A)}$$
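The court-case numbers can be worked through the same way. This short sketch uses the figures from the example: 1/10, 1/5, and the assumed conditional probability of .95. The naive product assumes independence, and the multiplication rule does not. Dividing the joint probability by Pr(beard) recovers the conditional probability, as the general formula says.

```r
p_beard <- 1/10
p_mustache <- 1/5
p_mustache_given_beard <- 0.95  # assumed value from the example

p_naive   <- p_beard * p_mustache              # wrongly assumes independence: 0.02
p_correct <- p_beard * p_mustache_given_beard  # multiplication rule: 0.095

# General formula: Pr(mustache | beard) = Pr(beard and mustache) / Pr(beard)
p_correct / p_beard  # 0.95
```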

To illustrate how we use these formulas and concepts in practice, we will use several examples related to card games.

Combinations and permutations

In our very first example, we imagined an urn with five beads. As a reminder, to compute the probability distribution of one draw, we simply listed out all the possibilities. There were five, and then, for each event, we counted how many of these possibilities were associated with the event. The resulting probability of choosing a blue bead is 3/5 because out of the five possible outcomes, three were blue.

For more complicated cases, the computations are not as straightforward. For instance, what is the probability that if I draw five cards without replacement, I get all cards of the same suit, what is known as a “flush” in poker? In a discrete probability course you learn theory on how to make these computations. Here we focus on how to use R code to compute the answers.

First, let’s construct a deck of cards. For this, we will use the expand.grid and paste functions. We use paste to create strings by joining smaller strings. To do this, we take the number and suit of a card and create the card name like this:

number <- "Three"

suit <- "Hearts"

paste(number, suit)
## [1] "Three Hearts"

paste also works on pairs of vectors, performing the operation element-wise:

paste(letters[1:5], as.character(1:5))
## [1] "a 1" "b 2" "c 3" "d 4" "e 5"

The function expand.grid gives us all the combinations of entries of two vectors. For example, if you have blue and black pants and white, grey, and plaid shirts, all your combinations are:

expand.grid(pants = c("blue", "black"), shirt = c("white", "grey", "plaid"))
## pants shirt

## 1 blue white

## 2 black white

## 3 blue grey

## 4 black grey

## 5 blue plaid

## 6 black plaid

Here is how we generate a deck of cards:

suits <- c("Diamonds", "Clubs", "Hearts", "Spades")

numbers <- c("Ace", "Deuce", "Three", "Four", "Five", "Six", "Seven",

"Eight", "Nine", "Ten", "Jack", "Queen", "King")

deck <- expand.grid(number=numbers, suit=suits)

deck <- paste(deck$number, deck$suit)

With the deck constructed, we can double check that the probability of a King in the first card is 1/13 by computing the proportion of possible outcomes that satisfy our condition:

kings <- paste("King", suits)

mean(deck %in% kings)
## [1] 0.07692308

Now, how about the conditional probability of the second card being a King given that the first was a King? Earlier, we deduced that if one King is already out of the deck and there are 51 left, then this probability is 3/51. Let’s confirm by listing out all possible outcomes.

To do this, we can use the permutations function from the gtools package. For any vector of size n, this function computes all the different ordered arrangements we can get when we select r items. Here are all the ways we can choose two numbers from a list consisting of 1, 2, 3:

library(gtools)

permutations(3, 2)
## [,1] [,2]

## [1,] 1 2

## [2,] 1 3

## [3,] 2 1

## [4,] 2 3

## [5,] 3 1

## [6,] 3 2

Notice that the order matters here: 3,1 is different from 1,3. Also, note that (1,1), (2,2), and (3,3) do not appear because once we pick a number, it can’t appear again.

Optionally, we can supply the vector of values to choose from through the argument v. If you want to see five random seven-digit phone numbers out of all possible phone numbers (without repeated digits), you can type:

all_phone_numbers <- permutations(10, 7, v = 0:9)

n <- nrow(all_phone_numbers)

index <- sample(n, 5)

all_phone_numbers[index,]
## [,1] [,2] [,3] [,4] [,5] [,6] [,7]

## [1,] 1 3 8 0 6 7 5

## [2,] 2 9 1 6 4 8 0

## [3,] 5 1 6 0 9 8 2

## [4,] 7 4 6 0 2 8 1

## [5,] 4 6 5 9 2 8 0

Instead of using the numbers 1 through 10, the default, it uses what we provided through v: the digits 0 through 9.

To compute all possible ways we can choose two cards when the order matters, we type:

hands <- permutations(52, 2, v = deck)

This is a matrix with two columns and 2652 rows. Here’s what it looks like:

head(hands)
## [,1] [,2]

## [1,] "Ace Clubs" "Ace Diamonds"

## [2,] "Ace Clubs" "Ace Hearts"

## [3,] "Ace Clubs" "Ace Spades"

## [4,] "Ace Clubs" "Deuce Clubs"

## [5,] "Ace Clubs" "Deuce Diamonds"

## [6,] "Ace Clubs" "Deuce Hearts"

With a matrix we can get the first and second cards like this:

first_card <- hands[,1]

second_card <- hands[,2]

Now the cases for which the first card was a King can be computed like this:

kings <- paste("King", suits)

sum(first_card %in% kings)
## [1] 204

To get the conditional probability, we compute what fraction of these have a King in the second card:

sum(first_card%in%kings & second_card%in%kings) / sum(first_card%in%kings)
## [1] 0.05882353

which is exactly 3/51, as we had already deduced. Notice that the code above is equivalent to:

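mean(first_card%in%kings & second_card%in%kings) / mean(first_card%in%kings)
## [1] 0.05882353

which uses mean instead of sum and is an R version of the following, with $A$ = “the first card is a King” and $B$ = “the second card is a King”:

$$\frac{\Pr(A \text{ and } B)}{\Pr(A)}$$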

How about if the order doesn’t matter? For example, in Blackjack if you get an Ace and a face card in the first draw, it is called a Natural 21 and you win automatically. If we wanted to compute the probability of this happening, we would enumerate the combinations, not the permutations, since the order does not matter.

combinations(3,2)
## [,1] [,2]

## [1,] 1 2

## [2,] 1 3

## [3,] 2 3

Unlike the permutations output, the result does not include (2,1) because (1,2) was already enumerated. The same applies to (3,1) and (3,2).
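The row counts above follow from standard counting formulas. There are n!/(n - r)! ordered selections of r out of n items, and choose(n, r) unordered ones. The helper n_perm below is a hypothetical name introduced for illustration, and choose is base R.

```r
# Hypothetical helper: number of ordered selections of r out of n items,
# n * (n - 1) * ... * (n - r + 1); assumes 1 <= r <= n
n_perm <- function(n, r) prod(seq(n - r + 1, n))

n_perm(3, 2)   # 6 rows, as in permutations(3, 2)
choose(3, 2)   # 3 rows, as in combinations(3, 2)

n_perm(52, 2)  # 2652 ordered two-card hands
choose(52, 2)  # 1326 unordered two-card hands
```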

So to compute the probability of a Natural 21 in Blackjack, we can do this:

aces <- paste("Ace", suits)

facecard <- c("King", "Queen", "Jack", "Ten")

facecard <- expand.grid(number = facecard, suit = suits)

facecard <- paste(facecard$number, facecard$suit)

hands <- combinations(52, 2, v = deck)

mean(hands[,1] %in% aces & hands[,2] %in% facecard)
## [1] 0.04826546

In the last line, we assume the Ace comes first. This is only because we know the way combinations enumerates possibilities, and it will list this case first. But to be safe, we could have written this and produced the same answer:

mean((hands[,1] %in% aces & hands[,2] %in% facecard) |

(hands[,2] %in% aces & hands[,1] %in% facecard))
## [1] 0.04826546

Monte Carlo example

Instead of using combinations to deduce the exact probability of a Natural 21, we can use a Monte Carlo simulation to estimate this probability. In this case, we draw two cards over and over and keep track of how many 21s we get. We can use the function sample to draw two cards without replacement:

hand <- sample(deck, 2)

hand
## [1] "Queen Clubs" "Seven Spades"

And then check if one card is an Ace and the other a face card or a 10. Going forward, we include 10 when we say face card. Now we need to check both possibilities:

(hand[1] %in% aces & hand[2] %in% facecard) |

(hand[2] %in% aces & hand[1] %in% facecard)
## [1] FALSE

If we repeat this 10,000 times, we get a very good approximation of the probability of a Natural 21.

Let’s start by writing a function that draws a hand and returns TRUE if we get a 21. The function does not need any arguments because it uses objects defined in the global environment.

blackjack <- function(){

hand <- sample(deck, 2)

(hand[1] %in% aces & hand[2] %in% facecard) |

(hand[2] %in% aces & hand[1] %in% facecard)

}

Here we do have to check both possibilities, Ace first or Ace second, because we are not using the combinations function. The function returns TRUE if we get a 21 and FALSE otherwise:

blackjack()
## [1] FALSE

Now we can play this game, say, 10,000 times:

B <- 10000

results <- replicate(B, blackjack())

mean(results)
## [1] 0.0475

Examples

In this section, we describe two popular discrete probability examples: the Monty Hall problem and the birthday problem. We use R to help illustrate the mathematical concepts.

Monty Hall problem

In the 1970s, there was a game show called “Let’s Make a Deal” and Monty Hall was the host. At some point in the game, contestants were asked to pick one of three doors. Behind one door there was a prize. The other doors had a goat behind them to show the contestant they had lost. After the contestant picked a door, before revealing whether the chosen door contained a prize, Monty Hall would open one of the two remaining doors and show the contestant there was no prize behind that door. Then he would ask “Do you want to switch doors?” What would you do?

We can use probability to show that if you stick with the original door choice, your chances of winning a prize remain 1 in 3. However, if you switch to the other door, your chances of winning double to 2 in 3! This seems counterintuitive. Many people incorrectly think both chances are 1 in 2 since you are choosing between 2 options. You can watch a detailed mathematical explanation on Khan Academy2 or read one on Wikipedia3. Below we use a Monte Carlo simulation to see which strategy is better. Note that this code is written longer than it should be for pedagogical purposes.

Let’s start with the stick strategy:

B <- 10000

monty_hall <- function(strategy){

doors <- as.character(1:3)

prize <- sample(c("car", "goat", "goat")) # no replacement

prize_door <- doors[prize == "car"] # which door has the prize?

my_pick <- sample(doors, 1) # we pick a door at random

show <- sample(doors[!doors %in% c(my_pick, prize_door)],1)

stick <- my_pick

switch <- doors[!doors%in%c(my_pick, show)]

choice <- ifelse(strategy == "stick", stick, switch) # apply the strategy

choice == prize_door # did we win?

}

stick <- replicate(B, monty_hall("stick"))

mean(stick)
## [1] 0.3416

switch <- replicate(B, monty_hall("switch"))

mean(switch)
## [1] 0.6682

As we write the code, we note that when we stick to our original choice, the line starting with show has no influence on the last logical operation: we win exactly when our random first pick, my_pick, is the prize door. From this we should realize that the chance is 1 in 3, what we began with. When we switch, the Monte Carlo estimate confirms the 2/3 calculation. This helps us gain some insight by showing that we are removing a door, show, that is definitely not a winner from our choices. We also see that unless we get it right when we first pick, we win: 1 - 1/3 = 2/3.
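We can also reach the same conclusion without simulation by enumerating the nine equally likely (prize door, first pick) pairs. This sketch assumes the prize is equally likely to be behind any door and the first pick is random. It also assumes that Monty always opens a goat door the contestant did not pick, so switching wins exactly when the first pick was wrong.

```r
# Every (prize door, first pick) pair is equally likely: 3 x 3 = 9 cases
cases <- expand.grid(prize = 1:3, pick = 1:3)

# Sticking wins only when the first pick was the prize door
stick_win <- cases$pick == cases$prize
# Monty removes the only other goat door, so switching wins when the first pick was wrong
switch_win <- cases$pick != cases$prize

mean(stick_win)   # 1/3
mean(switch_win)  # 2/3
```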

Birthday problem

Suppose you are in a classroom with 50 people. If we assume this is a randomly selected group of 50 people, what is the chance that at least two people have the same birthday? Although it is somewhat advanced, we can deduce this mathematically. We will do this later. Here we use a Monte Carlo simulation. For simplicity, we assume nobody was born on February 29. This actually doesn’t change the answer much.

First, note that birthdays can be represented as numbers between 1 and 365, so a sample of 50 birthdays can be obtained like this:

n <- 50

bdays <- sample(1:365, n, replace = TRUE)

To check if in this particular set of 50 people we have at least two with the same birthday, we can use the function duplicated, which returns TRUE whenever an element of a vector is a duplicate. Here is an example:

duplicated(c(1,2,3,1,4,3,5))
## [1] FALSE FALSE FALSE TRUE FALSE TRUE FALSE

The second time 1 and 3 appear, we get a TRUE. So to check if two birthdays were the same, we simply use the any and duplicated functions like this:

any(duplicated(bdays))
## [1] TRUE

In this case, we see that it did happen. At least two people had the same birthday.

To estimate the probability of a shared birthday in the group, we repeat this experiment by sampling sets of 50 birthdays over and over:

B <- 10000

same_birthday <- function(n){

bdays <- sample(1:365, n, replace=TRUE)

any(duplicated(bdays))

}

results <- replicate(B, same_birthday(50))

mean(results)
## [1] 0.9691

Were you expecting the probability to be this high?

People tend to underestimate these probabilities. To get an intuition as to why it is so high, think about what happens as the group size approaches 365: we start running out of days, and once the group has more than 365 people, the probability is exactly one.

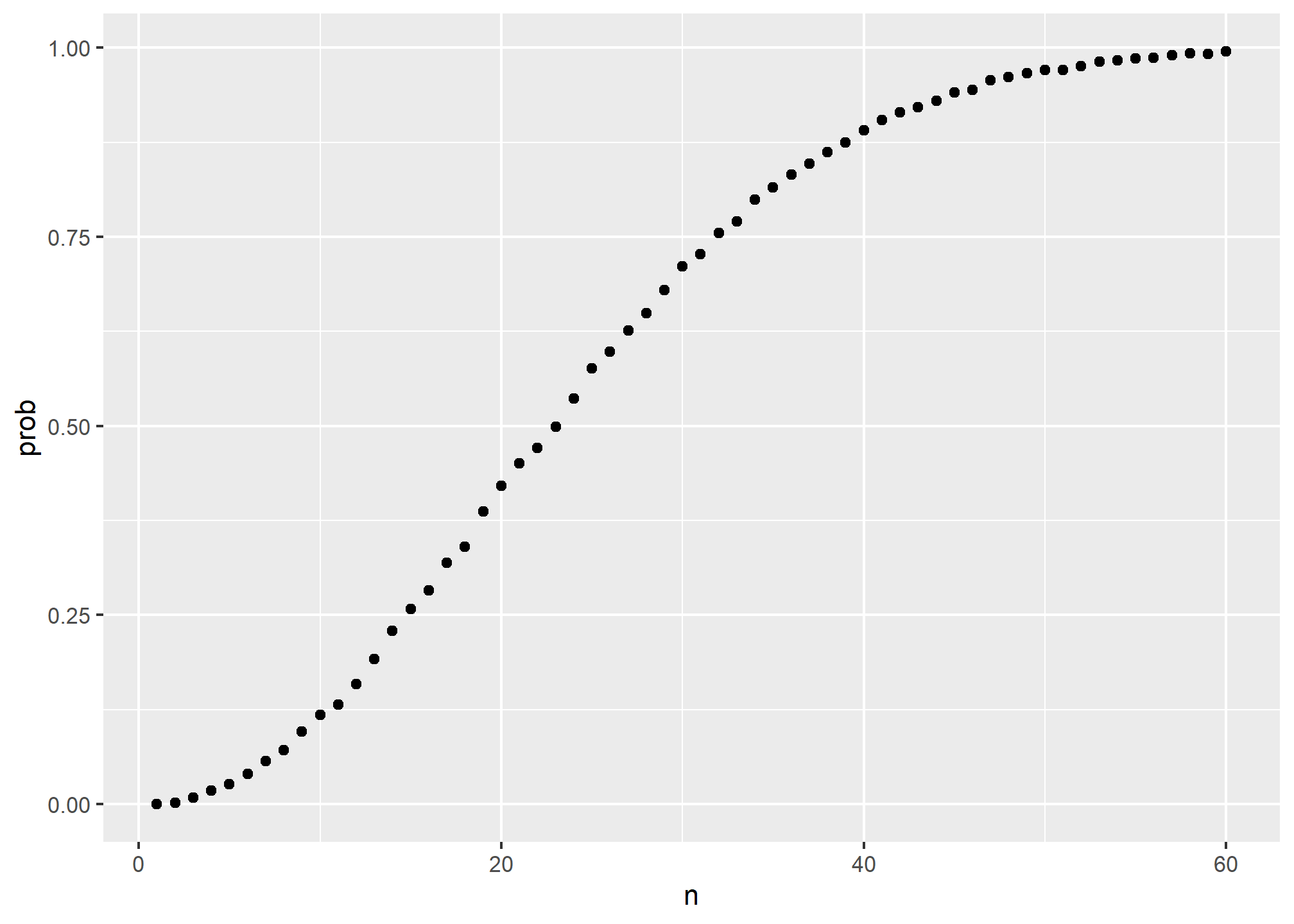

Say we want to use this knowledge to bet with friends about two people having the same birthday in a group of people. When are the chances larger than 50%? Larger than 75%?

Let’s create a look-up table. We can quickly create a function to compute this for any group size:

compute_prob <- function(n, B=10000){

results <- replicate(B, same_birthday(n))

mean(results)

}

Using the function sapply, we can perform element-wise operations on any function:

n <- seq(1,60)

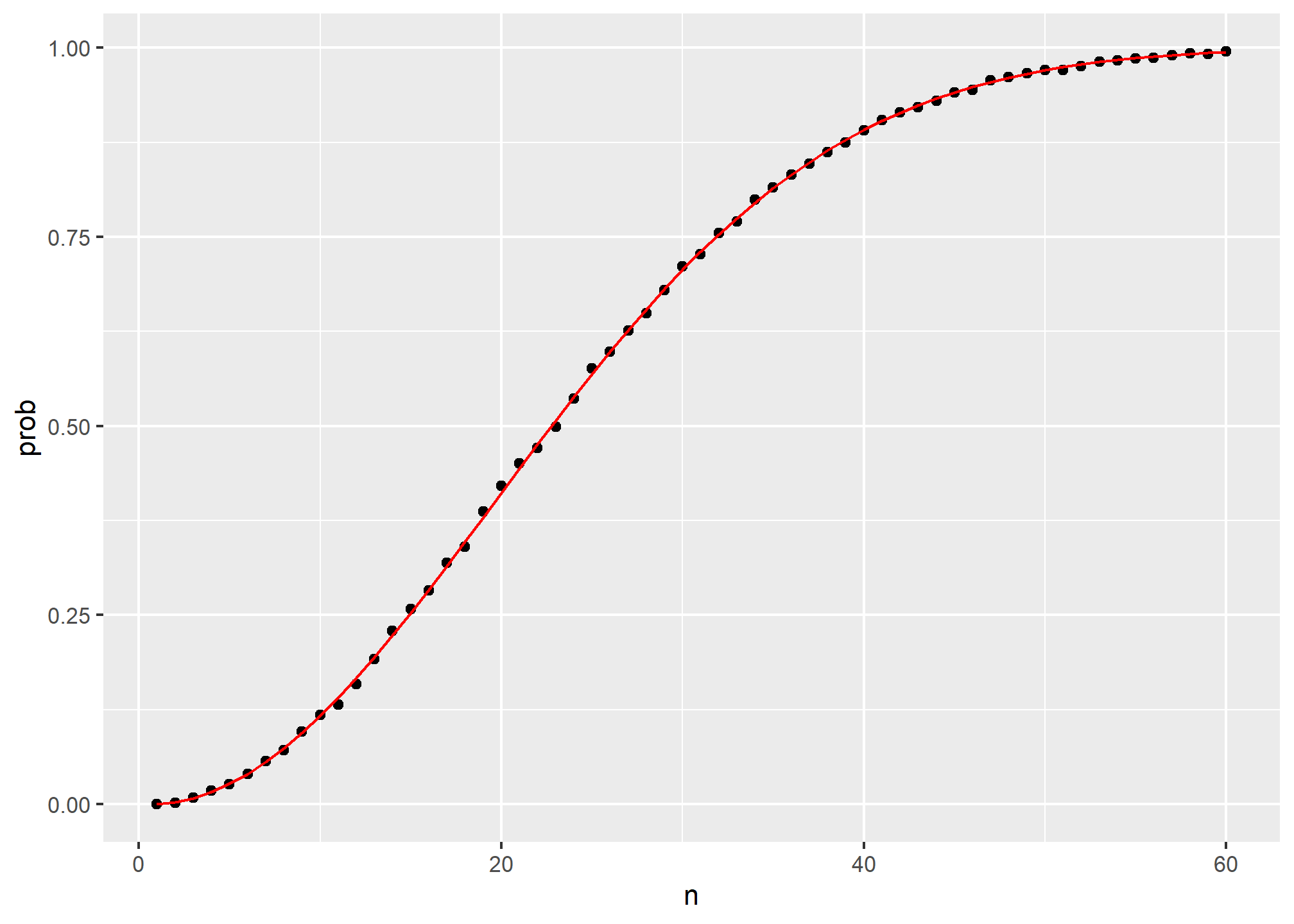

prob <- sapply(n, compute_prob)We can now make a plot of the estimated probabilities of two people having the same birthday in a group of size

library(tidyverse)

prob <- sapply(n, compute_prob)

qplot(n, prob)

Now let’s compute the exact probabilities rather than use Monte Carlo approximations. Not only do we get the exact answer using math, but the computations are much faster since we don’t have to generate experiments.

To make the math simpler, instead of computing the probability of it happening, we will compute the probability of it not happening. For this, we use the multiplication rule.

Let’s start with the first person. The probability that person 1 has a unique birthday is 1. The probability that person 2 has a unique birthday, given that person 1 already took one, is 364/365. Then, given that the first two people have unique birthdays, person 3 is left with 363 days to choose from. We continue this way and find that the probability of all 50 people having a unique birthday is:

$$1 \times \frac{364}{365} \times \frac{363}{365} \times \dots \times \frac{365 - 50 + 1}{365}$$

We can write a function that does this for any number:

exact_prob <- function(n){

prob_unique <- seq(365,365-n+1)/365

1 - prod( prob_unique)

}

eprob <- sapply(n, exact_prob)

ggplot(data.frame(n, prob, eprob), aes(n, prob)) + geom_point() + geom_line(aes(y = eprob), col = "red")

This plot shows that the Monte Carlo simulation provided a very good estimate of the exact probability. Had it not been possible to compute the exact probabilities, we would have still been able to accurately estimate the probabilities.
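The exact probabilities also answer the betting question posed earlier: at what group size do the chances exceed 50% or 75%? This short sketch reuses the exact_prob formula from above. It is redefined here so that the block runs on its own.

```r
# Exact probability of at least one shared birthday in a group of n people
exact_prob <- function(n) {
  prob_unique <- seq(365, 365 - n + 1) / 365
  1 - prod(prob_unique)
}

n <- seq(1, 60)
eprob <- sapply(n, exact_prob)

# Smallest group size at which the chance of a shared birthday exceeds each threshold
min(n[eprob > 0.50])  # 23
min(n[eprob > 0.75])  # 32
```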

Infinity in practice

The theory described here requires repeating experiments over and over forever. In practice we can’t do this.

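In the examples above, we used $B = 10,000$ Monte Carlo experiments. The larger $B$, the better the approximation, but how large does it need to be?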

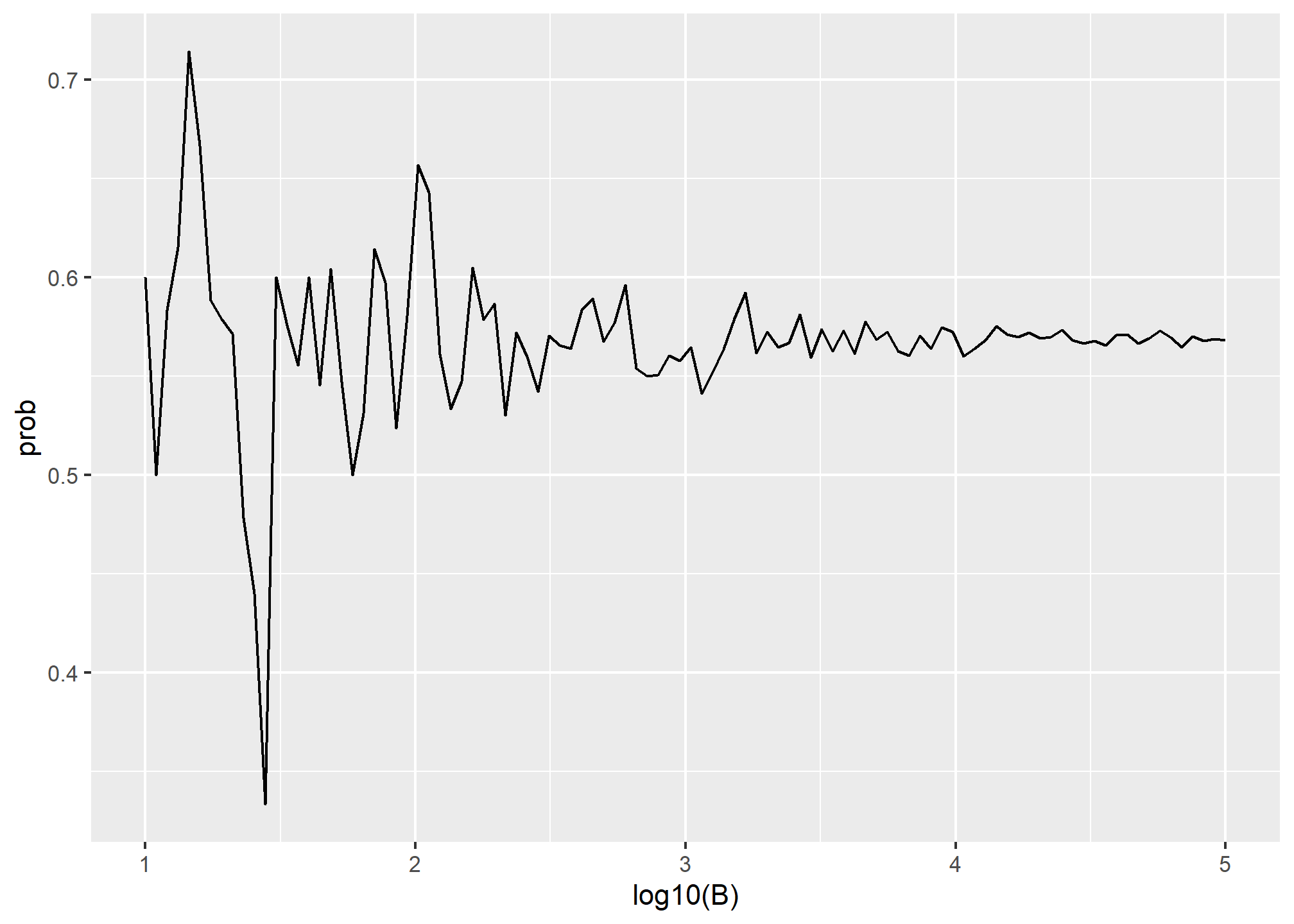

One practical approach we will describe here is to check for the stability of the estimate. The following is an example with the birthday problem for a group of 25 people.

B <- 10^seq(1, 5, len = 100)

compute_prob <- function(B, n=25){

same_day <- replicate(B, same_birthday(n))

mean(same_day)

}

prob <- sapply(B, compute_prob)

ggplot(data.frame(B, prob), aes(log10(B), prob)) + geom_line()

In this plot, we can see that the values start to stabilize (that is, they vary less than .01) around 1000. Note that the exact probability, which we know in this case, is 0.5686997.
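The exact value quoted above comes from the same product used in exact_prob, with n = 25:

```r
# Exact probability of at least one shared birthday among 25 people
p25 <- 1 - prod(seq(365, 341) / 365)
p25  # about 0.5687
```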

TRY IT

One ball will be drawn at random from a box containing: 3 cyan balls, 5 magenta balls, and 7 yellow balls. What is the probability that the ball will be cyan?

What is the probability that the ball will not be cyan?

Instead of taking just one draw, consider taking two draws. You take the second draw without returning the first draw to the box. We call this sampling without replacement. What is the probability that the first draw is cyan and that the second draw is not cyan?

Now repeat the experiment, but this time, after taking the first draw and recording the color, return it to the box and shake the box. We call this sampling with replacement. What is the probability that the first draw is cyan and that the second draw is not cyan?

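Two events $A$ and $B$ are independent if $\Pr(A \text{ and } B) = \Pr(A)\Pr(B)$. Under which situation are the draws independent?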

- You don’t replace the draw.

- You replace the draw.

- Neither

- Both

Say you’ve drawn 5 balls from the box, with replacement, and all have been yellow. What is the probability that the next one is yellow?

If you roll a 6-sided die six times, what is the probability of not seeing a 6?

Two teams, say the Celtics and the Cavs, are playing a seven game series. The Cavs are a better team and have a 60% chance of winning each game. What is the probability that the Celtics win at least one game?

Create a Monte Carlo simulation to confirm your answer to the previous problem. Use B <- 10000 simulations. Hint: use the following code to generate the results of the first four games:

celtic_wins <- sample(c(0,1), 4, replace = TRUE, prob = c(0.6, 0.4))

The Celtics must win one of these 4 games.

Two teams, say the Cavs and the Warriors, are playing a seven game championship series. The first to win four games, therefore, wins the series. The teams are equally good so they each have a 50-50 chance of winning each game. If the Cavs lose the first game, what is the probability that they win the series?

Confirm the results of the previous question with a Monte Carlo simulation.

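Two teams, $A$ and $B$, are playing a seven game series. Team $A$ is better than team $B$ and has a $p > 0.5$ chance of winning each game. Given a value $p$, the probability of winning the series for the underdog team $B$ can be computed with the following function based on a Monte Carlo simulation: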

prob_win <- function(p){

B <- 10000

result <- replicate(B, {

b_win <- sample(c(1,0), 7, replace = TRUE, prob = c(1-p, p))

sum(b_win)>=4

})

mean(result)

}

Use the function sapply to compute the probability, call it Pr, of winning for p <- seq(0.5, 0.95, 0.025). Then plot the result.

Repeat the exercise above, but now keep the probability fixed at p <- 0.75 and compute the probability for different series lengths: best of 1 game, 3 games, 5 games, and so on. Specifically, N <- seq(1, 25, 2). Hint: use this function:

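prob_win <- function(N, p=0.75){
  B <- 10000
  result <- replicate(B, {
    # simulate all N games; team B wins each with probability 1 - p
    b_win <- sample(c(1,0), N, replace = TRUE, prob = c(1-p, p))
    # team B takes a best-of-N series by winning a majority of the games
    sum(b_win)>=(N+1)/2
  })
  mean(result)
}

In previous lectures, we explained why, when summarizing a list of numeric values such as heights, it is not useful to construct a distribution that assigns a proportion to each possible outcome. For example, if we measure every single person in a very large population of size $n$ with extremely high precision, no two people will have exactly the same height, so we would have to assign the proportion $1/n$ to each observed value and would obtain no useful summary at all.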

Just as when using distributions to summarize numeric data, it is much more practical to define a function that operates on intervals rather than single values. The standard way of doing this is using the cumulative distribution function (CDF).

We described the empirical cumulative distribution function (eCDF) as a basic summary of a list of numeric values. As an example, we earlier defined the height distribution for adult male students. Here, we define the vector x to contain these heights:

library(tidyverse)
library(dslabs)
data(heights)
x <- heights %>% filter(sex=="Male") %>% pull(height)

We defined the empirical distribution function as:

F <- function(a) mean(x<=a)

which, for any value a, gives the proportion of values in the list x that are smaller than or equal to a.

Keep in mind that we have not yet introduced probability in the context of CDFs. Let’s do this by asking the following: if I pick one of the male students at random, what is the chance that he is taller than 70 inches? Because every student has the same chance of being picked, the answer to this is equivalent to the proportion of students that are taller than 70 inches. Using the CDF we obtain an answer by typing:

1 - F(70)
## [1] 0.3768473

Once a CDF is defined, we can use it to compute the probability of any subset. For instance, the probability of a student being taller than height a but no taller than height b is:

F(b)-F(a)

Because we can compute the probability for any possible event this way, the cumulative distribution function defines the probability distribution for picking a height at random from our vector of heights x.
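To see these calculations without the dslabs data, here is a minimal sketch that uses a small, made-up vector of ten heights (the values are hypothetical, chosen only so the answers are easy to count by hand). It defines F exactly as above and checks it against base R’s ecdf function, which returns the same empirical CDF as a function.

```r
# Ten hypothetical heights in inches (not the dslabs data)
x <- c(65, 67, 68, 69, 70, 70, 71, 72, 73, 75)

# Empirical CDF: proportion of values less than or equal to a
F <- function(a) mean(x <= a)

# Probability that a randomly picked student is taller than 70 inches
1 - F(70)        # 4 of the 10 values exceed 70, so 0.4

# Probability of a height in the interval (68, 72]
F(72) - F(68)    # 69, 70, 70, 71 and 72 fall in it, so 0.5

# Base R's ecdf() builds the same step function
F_base <- ecdf(x)
F_base(70)       # 0.6, the same as F(70)
```

Because every value in the list is equally likely to be picked, each of these proportions is also a probability.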

Theoretical continuous distributions

The normal distribution is a useful approximation to many naturally occurring distributions, including that of height. The cumulative distribution for the normal distribution is defined by a mathematical formula which in R can be obtained with the function pnorm. We say that a random quantity is normally distributed with average m and standard deviation s if its probability distribution is defined by:

F(a) = pnorm(a, m, s)

This is useful because if we are willing to use the normal approximation for, say, height, we don’t need the entire dataset to answer questions such as: what is the probability that a randomly selected student is taller than 70 inches? We just need the average height and standard deviation:

m <- mean(x)
s <- sd(x)
1 - pnorm(70.5, m, s)
## [1] 0.371369

Theoretical distributions as approximations

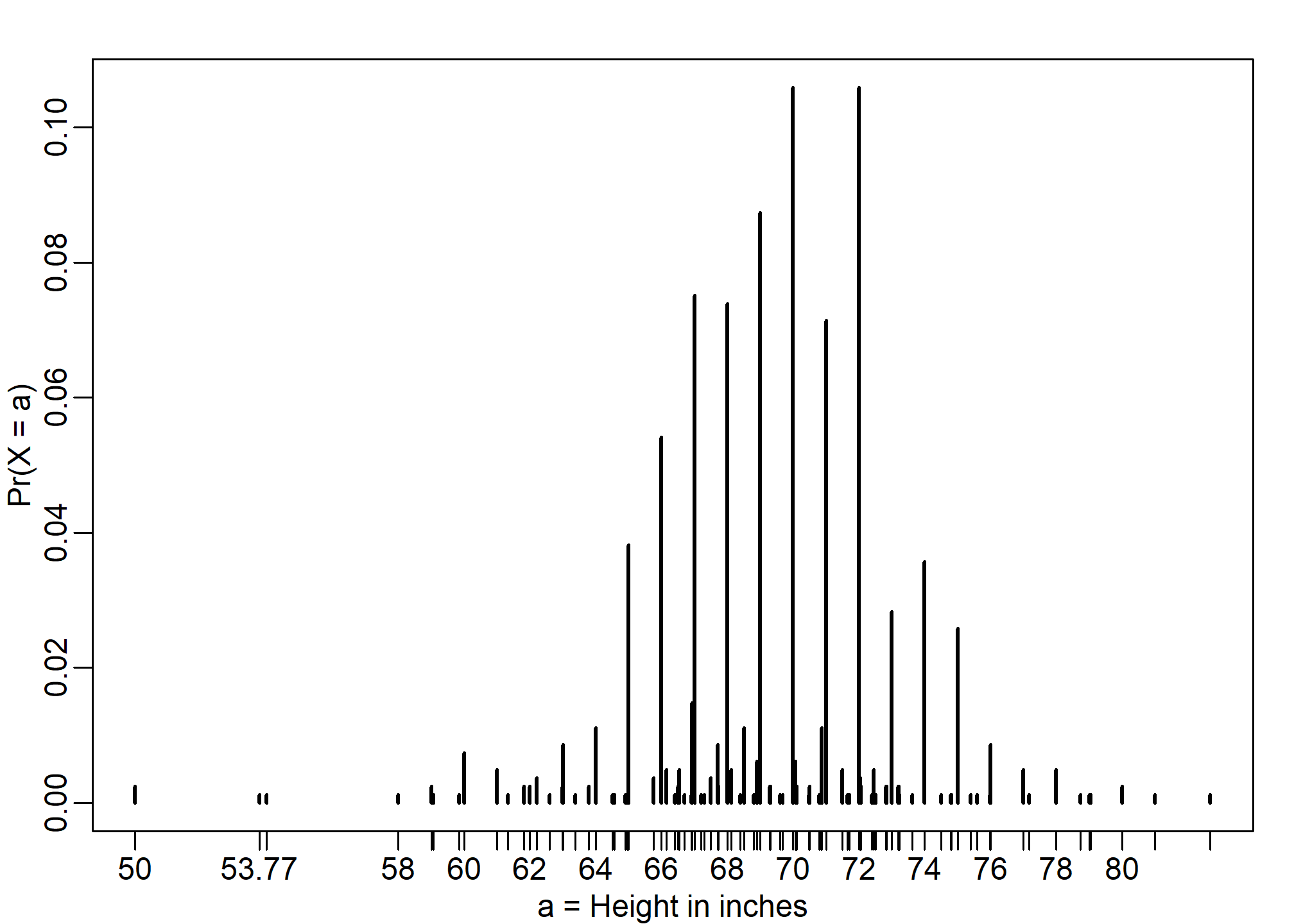

The normal distribution is derived mathematically: we do not need data to define it. For practicing data scientists, almost everything we do involves data. Data is always, technically speaking, discrete. For example, we could consider our height data categorical with each specific height a unique category. The probability distribution is defined by the proportion of students reporting each height. Here is a plot of that probability distribution:

While most students rounded up their heights to the nearest inch, others reported values with more precision. One student reported his height to be 69.6850393700787, which is 177 centimeters. The probability assigned to this height is 0.0012315 or 1 in 812. The probability for 70 inches is much higher at 0.1059113, but does it really make sense to think of the probability of being exactly 70 inches as being different than 69.6850393700787? Clearly it is much more useful for data analytic purposes to treat this outcome as a continuous numeric variable, keeping in mind that very few people, or perhaps none, are exactly 70 inches, and that the reason we get more values at 70 is because people round to the nearest inch.

With continuous distributions, the probability of a single value is not even defined. For example, it does not make sense to ask what the probability is that a normally distributed value is 70. Instead, we define probabilities for intervals. We could thus ask what the probability is that someone is between 69.5 and 70.5.

In cases like height, in which the data is rounded, the normal approximation is particularly useful if we deal with intervals that include exactly one round number. For example, the normal distribution is useful for approximating the proportion of students reporting values in intervals like the following three:

mean(x <= 68.5) - mean(x <= 67.5)
## [1] 0.114532
mean(x <= 69.5) - mean(x <= 68.5)
## [1] 0.1194581
mean(x <= 70.5) - mean(x <= 69.5)
## [1] 0.1219212

Note how close we get with the normal approximation:

pnorm(68.5, m, s) - pnorm(67.5, m, s)
## [1] 0.1031077
pnorm(69.5, m, s) - pnorm(68.5, m, s)
## [1] 0.1097121
pnorm(70.5, m, s) - pnorm(69.5, m, s)
## [1] 0.1081743

However, the approximation is not as useful for other intervals. For instance, notice how the approximation breaks down when we try to estimate:

mean(x <= 70.9) - mean(x<=70.1)
## [1] 0.02216749

with

pnorm(70.9, m, s) - pnorm(70.1, m, s)
## [1] 0.08359562

In general, we call this situation discretization. Although the true height distribution is continuous, the reported heights tend to be more common at discrete values, in this case, due to rounding. As long as we are aware of how to deal with this reality, the normal approximation can still be a very useful tool.
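One way to see discretization in action is to simulate it. The sketch below assumes, for illustration only, that true heights are normal with mean 69.3 and SD 3.6 (roughly in line with the male heights above), rounds them to the nearest inch as a stand-in for how students report their heights, and then compares an interval that contains one whole number with one that contains none.

```r
set.seed(2024)
m <- 69.3   # assumed mean (inches), for illustration
s <- 3.6    # assumed standard deviation (inches)

true_heights <- rnorm(10000, m, s)   # continuous "true" heights
reported <- round(true_heights)      # everyone rounds to the nearest inch

# Interval containing exactly one whole number: rounding does little harm
emp_one <- mean(reported <= 70.5) - mean(reported <= 69.5)
thr_one <- pnorm(70.5, m, s) - pnorm(69.5, m, s)

# Interval containing no whole number: the rounded data put no mass there
emp_none <- mean(reported <= 70.9) - mean(reported <= 70.1)
thr_none <- pnorm(70.9, m, s) - pnorm(70.1, m, s)

round(c(emp_one = emp_one, thr_one = thr_one,
        emp_none = emp_none, thr_none = thr_none), 4)
```

In this idealized version every reported value is a whole number, so the second empirical proportion is exactly zero; in the real data a few students reported decimals, which is presumably why the observed value above is small but not zero.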

The probability density

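For categorical distributions, we can define the probability of a category. For example, a roll of a die, let’s call it $X$, can be 1, 2, 3, 4, 5, or 6. The probability of 4 is defined as:

$$\Pr(X = 4) = 1/6$$

The CDF can then easily be defined:

$$F(4) = \Pr(X \leq 4) = \Pr(X = 4) + \Pr(X = 3) + \Pr(X = 2) + \Pr(X = 1)$$

Although for continuous distributions the probability of a single value $\Pr(X = x)$ is not defined, there is a theoretical definition that has a similar interpretation. The probability density at $x$ is defined as the function $f(a)$ such that:

$$F(a) = \Pr(X \leq a) = \int_{-\infty}^{a} f(x)\, dx$$

For those who know calculus, remember that the integral is related to a sum: it is the sum of bars with widths approaching 0. If you don’t know calculus, you can think of $f(x)$ as a curve for which the area under the curve up to the value $a$ gives you the probability $\Pr(X \leq a)$.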

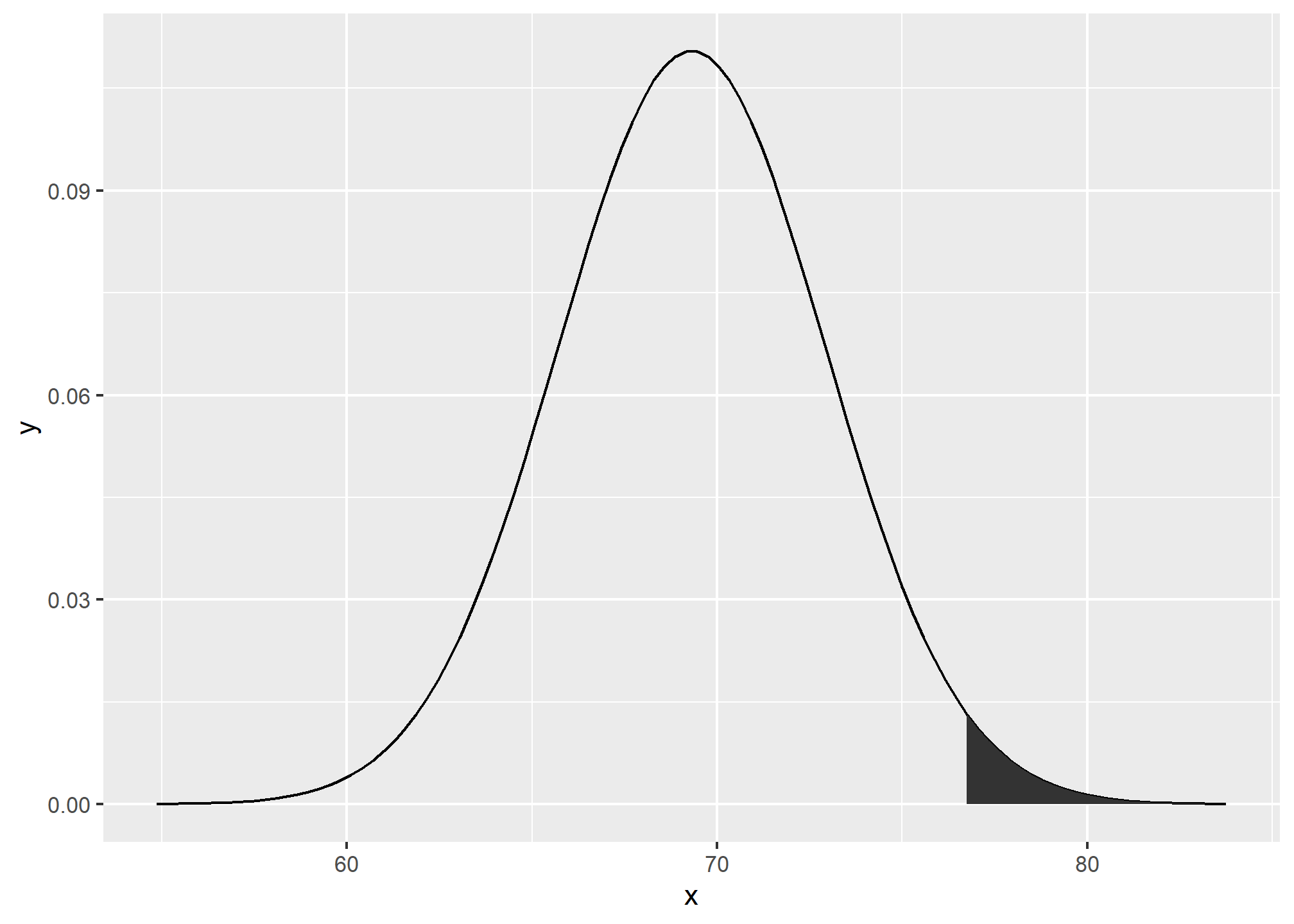

For example, to use the normal approximation to estimate the probability of someone being taller than 76 inches, we use:

1 - pnorm(76, m, s)## [1] 0.03206008which mathematically is the grey area below:

The curve you see is the probability density for the normal distribution. In R, we get this using the function dnorm.
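As a numerical check of the relation between the density and the CDF, the sketch below computes the same tail probability three ways: with pnorm, by integrating dnorm with base R’s integrate, and by literally summing thin bars under the curve. The mean and SD are assumed values (roughly those of the male heights), not the exact ones computed from x.

```r
m <- 69.3   # assumed mean (inches)
s <- 3.6    # assumed standard deviation (inches)

# 1. From the CDF
tail_cdf <- 1 - pnorm(76, m, s)

# 2. Area under the density from 76 to infinity
#    (mean and sd are passed on to dnorm; rel.tol tightened for the comparison)
tail_int <- integrate(dnorm, lower = 76, upper = Inf,
                      mean = m, sd = s, rel.tol = 1e-8)$value

# 3. Sum of thin bars: density at each bar's midpoint times the bar width
width <- 0.001
midpoints <- seq(76 + width / 2, 100, by = width)  # mass beyond 100 is negligible
tail_bars <- sum(dnorm(midpoints, m, s) * width)

c(cdf = tail_cdf, integral = tail_int, bars = tail_bars)
```

All three agree to several decimal places, which is the sense in which the CDF is the area under the density.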

Although it may not be immediately obvious why knowing about probability densities is useful, understanding this concept will be essential to those wanting to fit models to data for which predefined functions are not available.

Monte Carlo simulations for continuous variables

R provides functions to generate normally distributed outcomes. Specifically, the rnorm function takes three arguments: size, average (defaults to 0), and standard deviation (defaults to 1) and produces random numbers. Here is an example of how we could generate data that looks like our reported heights:

n <- length(x)

m <- mean(x)

s <- sd(x)

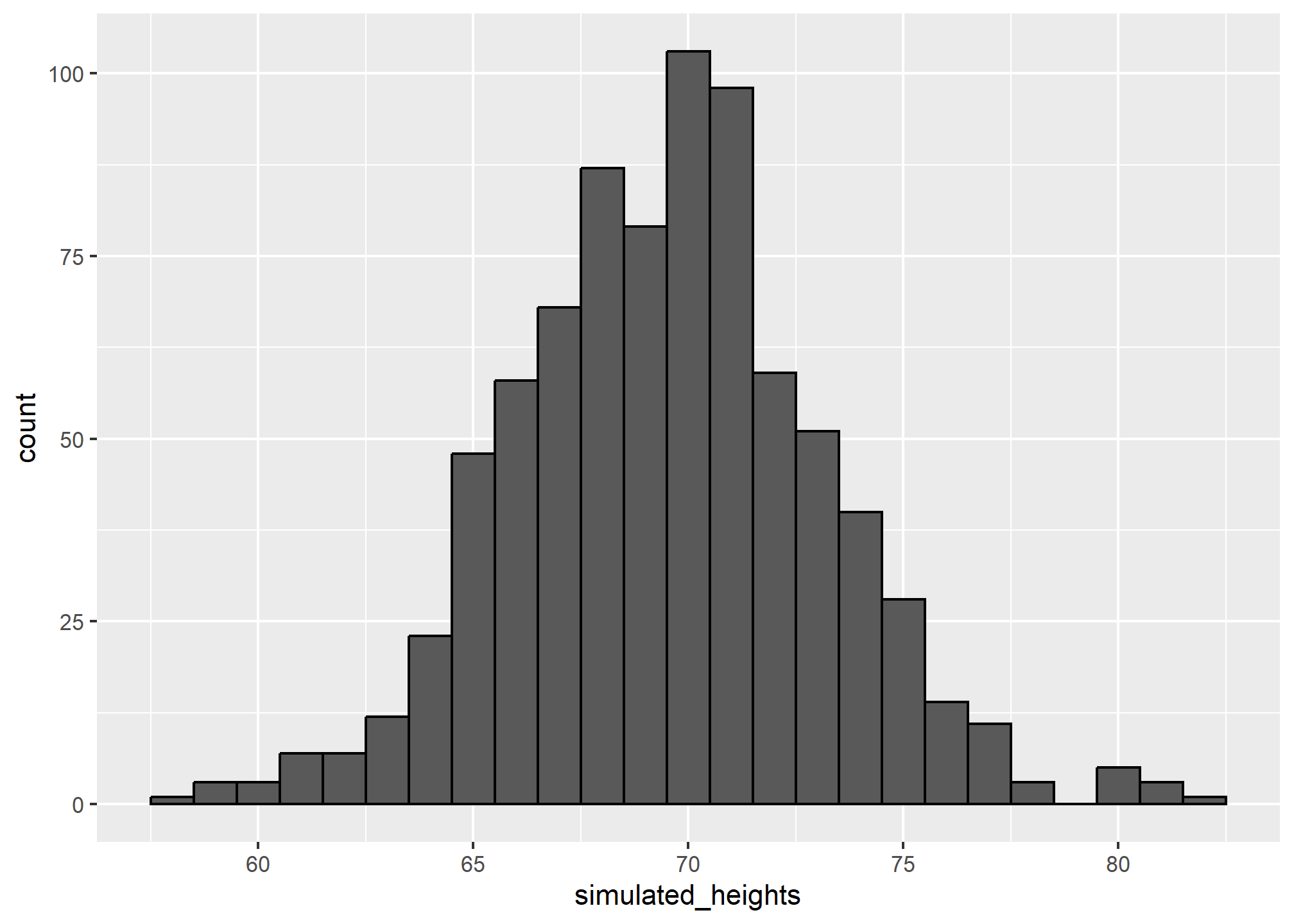

simulated_heights <- rnorm(n, m, s)Not surprisingly, the distribution looks normal:

This is one of the most useful functions in R as it will permit us to generate data that mimics natural events and answers questions related to what could happen by chance by running Monte Carlo simulations.

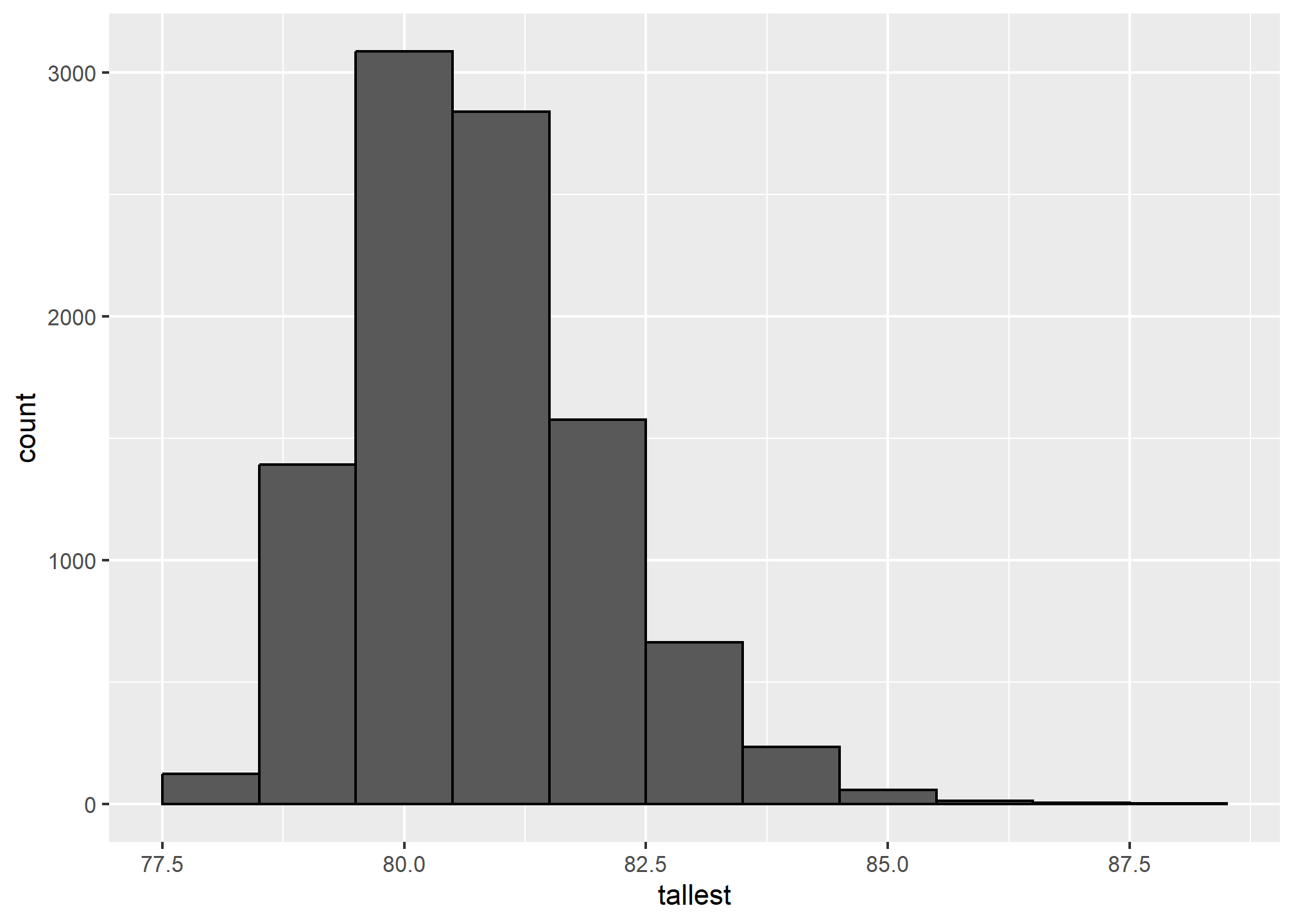

If, for example, we pick 800 males at random, what is the distribution of the tallest person? How rare is a seven footer in a group of 800 males? The following Monte Carlo simulation helps us answer that question:

B <- 10000

tallest <- replicate(B, {

simulated_data <- rnorm(800, m, s)

max(simulated_data)

})Having a seven footer is quite rare:

mean(tallest >= 7*12)## [1] 0.0172Here is the resulting distribution:

Note that it does not look normal.
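Because the 800 heights are simulated as independent draws, the tallest one is at most a only if all 800 are, so $\Pr(\max \leq a) = F(a)^{800}$. The sketch below uses this to cross-check the Monte Carlo estimate. The mean and SD are assumed values rather than the ones computed from x, so the numbers will differ somewhat from the 0.0172 above.

```r
set.seed(1)
m <- 69.3   # assumed mean (inches)
s <- 3.6    # assumed standard deviation (inches)
B <- 10000

# Monte Carlo: tallest of 800 simulated males, repeated B times
tallest <- replicate(B, max(rnorm(800, m, s)))
mc_prob <- mean(tallest >= 7 * 12)

# Exact: P(max >= 84) = 1 - P(all 800 are below 84) = 1 - F(84)^800
exact_prob <- 1 - pnorm(7 * 12, m, s)^800

c(monte_carlo = mc_prob, exact = exact_prob)
```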

Continuous distributions

The normal distribution is not the only useful theoretical distribution. Other continuous distributions that we may encounter are the Student’s t, chi-square, exponential, gamma, and beta distributions; the beta-binomial, which also comes up, is a discrete distribution. For the continuous distributions listed, R provides functions to compute the density, the quantiles, and the cumulative distribution function, and to generate Monte Carlo simulations. R uses a convention that lets us remember the names, namely using the letters d, q, p, and r in front of a shorthand for the distribution. We have already seen the functions dnorm, pnorm, and rnorm for the normal distribution. Remember that dnorm gives us the PDF, pnorm gives us the CDF, rnorm gives us random draws from the normal, and the function qnorm gives us the quantiles (the input to qnorm, then, must be between 0 and 1):

qnorm(.25, mean = 0, sd = 1)
## [1] -0.6744898
qnorm(.25, mean = -10, sd = 5)
## [1] -13.37245
qnorm(.975, mean = 0, sd = 1)
## [1] 1.959964

TRY IT
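The d/p/q/r convention carries over to the other distributions. The short sketch below (parameter values chosen arbitrarily) checks that a q-function inverts the matching p-function and shows the same pattern for the exponential, Student’s t, and chi-square distributions, all of which are in base R’s stats package.

```r
# d: density; the standard normal density at 0 is 1/sqrt(2*pi)
dnorm(0)

# q inverts p: the 25th percentile of a normal with mean -10, SD 5 maps back to 0.25
q25 <- qnorm(0.25, mean = -10, sd = 5)
pnorm(q25, mean = -10, sd = 5)

# The same pattern for an exponential distribution with rate 2
pexp(qexp(0.9, rate = 2), rate = 2)

# 97.5th percentile of a t distribution with 5 degrees of freedom:
# larger than the normal's 1.96 because the t has heavier tails
qt(0.975, df = 5)

# r: five random draws from a chi-square with 3 degrees of freedom
rchisq(5, df = 3)
```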

Assume the distribution of female heights is approximated by a normal distribution with a mean of 64 inches and a standard deviation of 3 inches. If we pick a female at random, what is the probability that she is 5 feet or shorter?

Assume the distribution of female heights is approximated by a normal distribution with a mean of 64 inches and a standard deviation of 3 inches. If we pick a female at random, what is the probability that she is 6 feet or taller?

Assume the distribution of female heights is approximated by a normal distribution with a mean of 64 inches and a standard deviation of 3 inches. If we pick a female at random, what is the probability that she is between 61 and 67 inches?

Repeat the exercise above, but convert everything to centimeters. That is, multiply every height, including the standard deviation, by 2.54. What is the answer now?

Notice that the answer to the question does not change when you change units. This makes sense since the answer to the question should not be affected by what units we use. In fact, if you look closely, you notice that 61 and 67 are both 1 SD away from the average. Compute the probability that a randomly picked, normally distributed random variable is within 1 SD from the average.

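To see the math that explains why the answers to the previous three questions are the same, suppose we have a normally distributed random variable $X$ with average $m$ and standard error $s$, and we ask for the probability of $X$ being smaller than or equal to $a$. Remember that, by definition, $a$ is $(a - m)/s$ standard deviations $s$ away from the average $m$. The probability is:

$$\Pr(X \leq a)$$

Now we subtract $m$ from both sides and then divide both sides by $s$:

$$\Pr\left(\frac{X - m}{s} \leq \frac{a - m}{s}\right)$$

The quantity on the left is a standard normal random variable. It has an average of 0 and a standard error of 1. We will call it $Z$:

$$\Pr\left(Z \leq \frac{a - m}{s}\right)$$

So, no matter the units, the probability of $X \leq a$ is the same as the probability of a standard normal variable being less than $(a - m)/s$. If m is the average and s the standard error, which of the following R code would give us the right answer in every situation:

- mean(X<=a)
- pnorm((a - m)/s)
- pnorm((a - m)/s, m, s)
- pnorm(a)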

Imagine the distribution of male adults is approximately normal with an expected value of 69 and a standard deviation of 3. How tall is the male in the 99th percentile? Hint: use qnorm.

The distribution of IQ scores is approximately normally distributed. The average is 100 and the standard deviation is 15. Suppose you want to know the distribution of the highest IQ across all graduating classes if 10,000 people are born each year in your school district. Run a Monte Carlo simulation with B=1000 generating 10,000 IQ scores and keeping the highest. Make a histogram.

Random variables

In data science, we often deal with data that is affected by chance in some way: the data comes from a random sample, the data is affected by measurement error, or the data measures some outcome that is random in nature. Being able to quantify the uncertainty introduced by randomness is one of the most important jobs of a data analyst. Statistical inference offers a framework, as well as several practical tools, for doing this. The first step is to learn how to mathematically describe random variables.

In this section, we introduce random variables and their properties starting with their application to games of chance. We then describe some of the events surrounding the financial crisis of 2007-2008[4] using probability theory. This financial crisis was in part caused by underestimating the risk of certain securities[5] sold by financial institutions. Specifically, the risks of mortgage-backed securities (MBS) and collateralized debt obligations (CDO) were grossly underestimated. These assets were sold at prices that assumed most homeowners would make their monthly payments, and the probability of this not occurring was calculated as being low. A combination of factors resulted in many more defaults than were expected, which led to a price crash of these securities. As a consequence, banks lost so much money that they needed government bailouts to avoid closing down completely.

Definition of Random variables

Random variables are numeric outcomes resulting from random processes. We can easily generate random variables using some of the simple examples we have shown. For example, define X to be 1 if a bead is blue and 0 otherwise:

beads <- rep( c("red", "blue"), times = c(2,3))
X <- ifelse(sample(beads, 1) == "blue", 1, 0)

Here X is a random variable: every time we select a new bead the outcome changes randomly. See below:

ifelse(sample(beads, 1) == "blue", 1, 0)
## [1] 1
ifelse(sample(beads, 1) == "blue", 1, 0)
## [1] 0
ifelse(sample(beads, 1) == "blue", 1, 0)
## [1] 0

Sometimes it’s 1 and sometimes it’s 0.

Sampling models

Many data generation procedures, those that produce the data we study, can be modeled quite well as draws from an urn. For instance, we can model the process of polling likely voters as drawing 0s (Republicans) and 1s (Democrats) from an urn containing the 0 and 1 code for all likely voters. In epidemiological studies, we often assume that the subjects in our study are a random sample from the population of interest. The data related to a specific outcome can be modeled as a random sample from an urn containing the outcome for the entire population of interest. Similarly, in experimental research, we often assume that the individual organisms we are studying, for example worms, flies, or mice, are a random sample from a larger population. Randomized experiments can also be modeled by draws from an urn given the way individuals are assigned into groups: when getting assigned, you draw your group at random. Sampling models are therefore ubiquitous in data science. Casino games offer a plethora of examples of real-world situations in which sampling models are used to answer specific questions. We will therefore start with such examples.

Suppose a very small casino hires you to consult on whether they should set up roulette wheels. To keep the example simple, we will assume that 1,000 people will play and that the only game you can play on the roulette wheel is to bet on red or black. The casino wants you to predict how much money they will make or lose. They want a range of values and, in particular, they want to know what’s the chance of losing money. If this probability is too high, they will pass on installing roulette wheels.

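We are going to define a random variable $S$ that will represent the casino’s total winnings. Let’s start by constructing the urn. A roulette wheel has 18 red pockets, 18 black pockets, and 2 green ones, so playing a color in one game of roulette is equivalent to drawing from this urn:

color <- rep(c("Black", "Red", "Green"), c(18, 18, 2))

The 1,000 outcomes from 1,000 people playing are independent draws from this urn. If red comes up, the gambler wins and the casino loses a dollar, so we draw a -1. Otherwise, the casino wins a dollar and we draw a 1. To construct our random variable $S$, we can use this code: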

n <- 1000
X <- sample(ifelse(color == "Red", -1, 1), n, replace = TRUE)
X[1:10]
## [1] -1 1 1 -1 -1 -1 1 1 1 1

Because we know the proportions of 1s and -1s, we can generate the draws with one line of code, without defining color:

X <- sample(c(-1,1), n, replace = TRUE, prob=c(9/19, 10/19))

We call this a sampling model since we are modeling the random behavior of roulette with the sampling of draws from an urn. The total winnings $S$ is simply the sum of these 1,000 independent draws:

X <- sample(c(-1,1), n, replace = TRUE, prob=c(9/19, 10/19))
S <- sum(X)
S
## [1] 22

The probability distribution of a random variable

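If you run the code above, you see that $S$ changes every time. This is, of course, because $S$ is a random variable. The probability distribution of a random variable tells us the probability of the observed value falling in any given interval. So, for example, if we want to know the probability that the casino loses money, we are asking for the probability that $S$ is in the interval $S < 0$.

Note that if we can define a cumulative distribution function $F(a) = \Pr(S \leq a)$, then we will be able to answer any question related to the probability of events defined by our random variable $S$, including the event $S < 0$. We call this $F$ the random variable’s distribution function.

We can estimate the distribution function for the random variable $S$ by using a Monte Carlo simulation to generate many realizations of the random variable. With the code below, we run the experiment of having 1,000 people play roulette, over and over, specifically $B = 10{,}000$ times: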

n <- 1000

B <- 10000

roulette_winnings <- function(n){

X <- sample(c(-1,1), n, replace = TRUE, prob=c(9/19, 10/19))

sum(X)

}

S <- replicate(B, roulette_winnings(n))

Now we can ask the following: in our simulations, how often did we get sums less than or equal to a?

mean(S <= a)This will be a very good approximation of

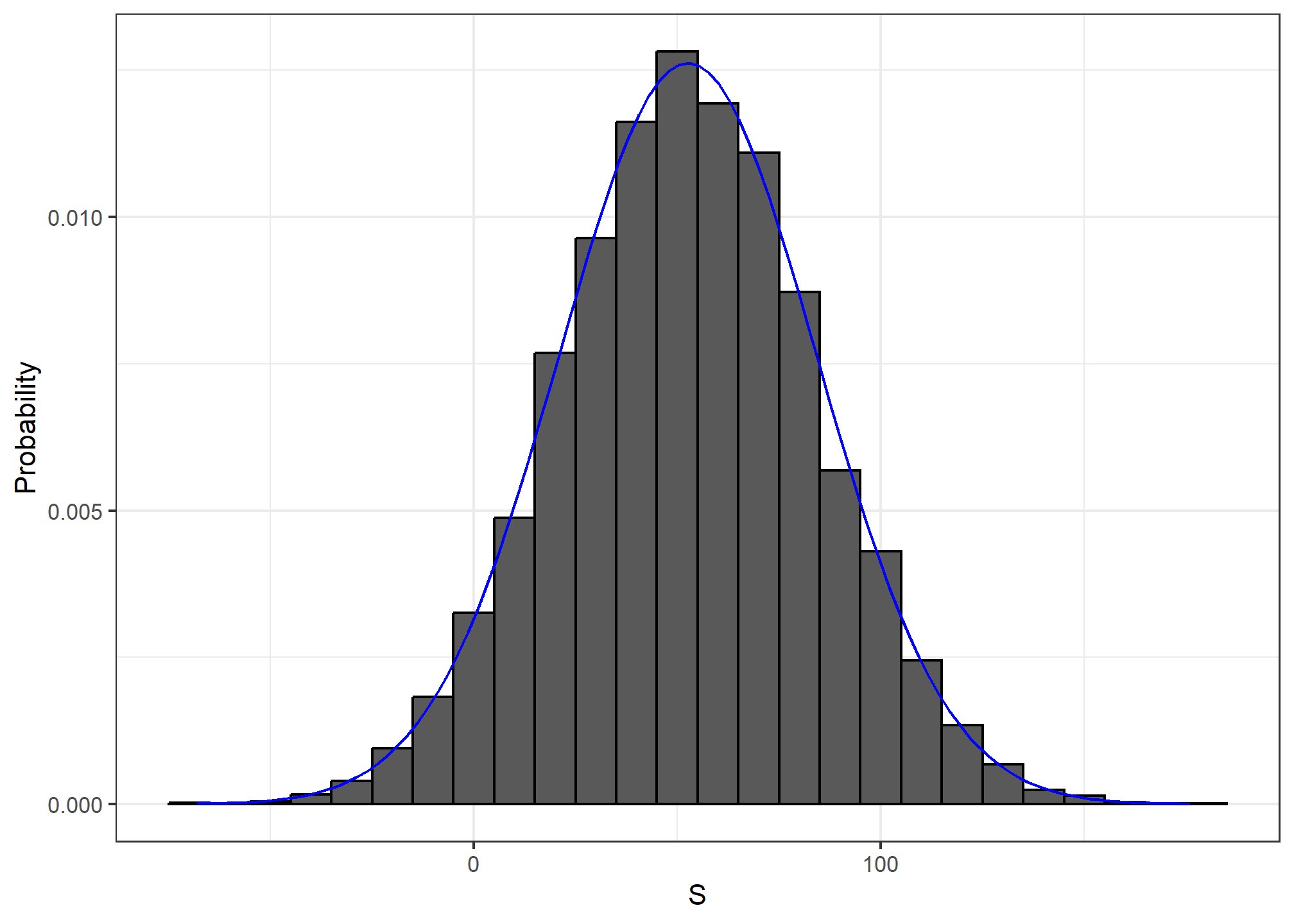

mean(S<0)## [1] 0.0456We can visualize the distribution of


We see that the distribution appears to be approximately normal. A qq-plot will confirm that the normal approximation is close to a perfect approximation for this distribution. If, in fact, the distribution is normal, then all we need to define the distribution is the average and the standard deviation. Because we have the original values from which the distribution is created, we can easily compute these with mean(S) and sd(S). The blue curve you see added to the histogram above is a normal density with this average and standard deviation.

This average and this standard deviation have special names. They are referred to as the expected value and standard error of the random variable $S$.

Distributions versus probability distributions

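Before we continue, let’s make an important distinction and connection between the distribution of a list of numbers and a probability distribution. In the visualization lectures, we described how any list of numbers $x_1, \dots, x_n$ has a distribution: $F(a)$ is the proportion of the list that is less than or equal to $a$. Because they are useful summaries when the distribution is approximately normal, we also defined the average and standard deviation, which are computed with straightforward operations on the vector x containing the list:

m <- sum(x)/length(x)
s <- sqrt(sum((x - m)^2) / length(x))

A random variable $X$ has a distribution function too. To define it, we do not need a list of numbers: it is a theoretical concept. In this case, we define the distribution as the $F(a)$ that answers the question: what is the probability that $X$ is less than or equal to $a$? There is no list of numbers.

However, if $X$ is defined by drawing from an urn with numbers in it, then there is a list: the list of numbers inside the urn. In this case, the distribution of that list is the probability distribution of $X$, and the average and standard deviation of that list are the expected value and standard error of the random variable.

Another way to think about it that does not involve an urn is to run a Monte Carlo simulation and generate a very large list of outcomes of $X$. These outcomes are a list of numbers, and the distribution of this list will be a very good approximation of the probability distribution of $X$. The longer the list, the better the approximation. The average and standard deviation of this list will approximate the expected value and standard error of the random variable.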

Notation for random variables

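In statistical textbooks, upper case letters are used to denote random variables and we follow this convention here. Lower case letters are used for observed values. You will see some notation that includes both. For example, you will see events defined as $X \leq x$. Here $X$ is a random variable, making this a random event, while $x$ is an arbitrary value that is not random. So, for example, $X$ might represent the number on a die roll and $x$ an actual value we could see: 1, 2, 3, 4, 5, or 6. In this case, the probability of $X = x$ is 1/6 regardless of the observed value $x$.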

The expected value and standard error

We have described sampling models for draws. We will now go over the mathematical theory that lets us approximate the probability distributions for the sum of draws. Once we do this, we will be able to help the casino predict how much money they will make. The same approach we use for the sum of draws will be useful for describing the distribution of averages and proportions, which we will need to understand how polls work.

The first important concept to learn is the expected value.

In statistics books, it is common to use the letter $\mathrm{E}$ like this, $\mathrm{E}[X]$, to denote the expected value of the random variable $X$.

A random variable will vary around its expected value in a way that if you take the average of many, many draws, the average of the draws will approximate the expected value, getting closer and closer the more draws you take.

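Theoretical statistics provides techniques that facilitate the calculation of expected values in different circumstances. For example, a useful formula tells us that the expected value of a random variable defined by one draw is the average of the numbers in the urn. In the urn used to model betting on red in roulette, we have 20 one-dollar values and 18 negative-one-dollar values. The expected value is thus:

$$\mathrm{E}[X] = \frac{20 + (-18)}{38} = \frac{2}{38} \approx 0.053$$

which is about 5 cents. It is a bit counterintuitive to say that $X$ varies around 0.05 when the only values it takes are 1 and -1. One way to make sense of the expected value in this context is to realize that if we play the game over and over, the casino wins, on average, about 5 cents per game. A Monte Carlo simulation confirms this: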

B <- 10^6

x <- sample(c(-1,1), B, replace = TRUE, prob=c(9/19, 10/19))

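mean(x)
## [1] 0.05169

In general, if the urn has two possible outcomes, say $a$ and $b$, with proportions $p$ and $1-p$ respectively, the average is:

$$\mathrm{E}[X] = ap + b(1-p)$$

To see this, notice that if there are $n$ beads in the urn, then we have $np$ beads with value $a$ and $n(1-p)$ beads with value $b$. Because the average is the sum, $n \times a \times p + n \times b \times (1-p)$, divided by the total $n$, the average is $ap + b(1-p)$.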

The reason we define the expected value is that this mathematical definition turns out to be useful for approximating the probability distributions of sums, which in turn is useful for describing the distribution of averages and proportions. The first useful fact is that the expected value of the sum of the draws is:

$$\text{number of draws} \times \text{average of the numbers in the urn}$$

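So if 1,000 people play roulette, the casino expects to win, on average, about 1,000 × 0.05 = 50 dollars (more precisely, $1{,}000 \times 2/38 \approx 52.63$ dollars). But this is an expected value. How different can one observation be from the expected value? The casino really needs to know this: if losing money is too likely, they will not install roulette wheels. Statistical theory once again answers this question. The standard error (SE) gives us an idea of the size of the variation around the expected value. In statistics books, it is common to use

$$\mathrm{SE}[X]$$

to denote the standard error of a random variable.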

If our draws are independent, then the standard error of the sum is given by the equation:

$$\sqrt{\text{number of draws}} \times \text{standard deviation of the numbers in the urn}$$

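Using the definition of standard deviation, we can derive, with a bit of math, that if an urn contains two values $a$ and $b$ with proportions $p$ and $1-p$, respectively, the standard deviation is:

$$|b - a| \sqrt{p(1-p)}$$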

So in our roulette example, the standard deviation of the values inside the urn is $|1 - (-1)| \sqrt{10/19 \times 9/19}$, or:

2 * sqrt(90)/19
## [1] 0.998614

The standard error tells us the typical difference between a random variable and its expectation. Since one draw is obviously the sum of just one draw, we can use the formula above to calculate that the random variable defined by one draw has an expected value of 0.05 and a standard error of about 1. This makes sense since we either get 1 or -1, with 1 slightly favored over -1.
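The two-value formulas can be checked against a literal urn. In the sketch below, urn_mean and urn_sd are hypothetical helper names implementing $ap + b(1-p)$ and $|b - a|\sqrt{p(1-p)}$; the urn is the roulette one, with 20 values of 1 and 18 values of -1.

```r
# Hypothetical helpers for an urn holding only the values a and b,
# where p is the proportion of a's
urn_mean <- function(a, b, p) a * p + b * (1 - p)
urn_sd   <- function(a, b, p) abs(b - a) * sqrt(p * (1 - p))

# The roulette urn: 20 ones (casino wins) and 18 minus ones (casino loses)
urn <- rep(c(1, -1), times = c(20, 18))
p <- 20 / 38

# Formulas versus direct computation on the list (SD of the list, dividing by n)
c(formula = urn_mean(1, -1, p), direct = mean(urn))
c(formula = urn_sd(1, -1, p),
  direct  = sqrt(mean((urn - mean(urn))^2)),
  lecture = 2 * sqrt(90) / 19)
```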

Using the formula above, the sum of 1,000 people playing has standard error of about $32:

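n <- 1000
sqrt(n) * 2 * sqrt(90)/19
## [1] 31.57895

As a result, when 1,000 people bet on red, the casino is expected to win about 50 dollars, with a standard error of about 32 dollars. It therefore seems like a safe bet, but we still haven’t answered the question: how likely is the casino to lose money? Here the Central Limit Theorem will help.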

Advanced note: Before continuing we should point out that exact probability calculations for the casino winnings can be performed with the binomial distribution. However, here we focus on the CLT, which can be generally applied to sums of random variables in a way that the binomial distribution can’t.
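For the curious, here is a minimal sketch of that exact calculation. If W is the number of the 1,000 bets the casino wins, then W is binomial with size 1,000 and success probability 10/19, and the winnings are S = W - (1000 - W) = 2W - 1000, so the casino loses money exactly when W ≤ 499.

```r
n <- 1000
p <- 10/19   # probability the casino wins a single bet on red

# Exact: S = 2W - n < 0 exactly when W <= 499, with W ~ Binomial(n, p)
exact <- pbinom(n / 2 - 1, size = n, prob = p)

# CLT approximation used in these notes, for comparison
mu <- n * (20 - 18) / 38
se <- sqrt(n) * 2 * sqrt(90) / 19
clt <- pnorm(0, mu, se)

c(exact = exact, clt = clt)
```

The two answers are close; the advantage of the CLT route is that it works for sums of draws from urns with more than two values, where no simple binomial formula exists.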

Population SD versus the sample SD

The standard deviation of a list x (below we use heights as an example) is defined as the square root of the average of the squared differences:

library(dslabs)

x <- heights$height

m <- mean(x)

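s <- sqrt(mean((x-m)^2))

Using mathematical notation we write:

$$\mu = \frac{1}{n} \sum_{i=1}^{n} x_i \qquad \sigma = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (x_i - \mu)^2}$$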

However, be aware that the sd function returns a slightly different result:

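identical(s, sd(x))
## [1] FALSE
s-sd(x)
## [1] -0.001942661

This is because the sd function in R does not return the SD of the list, but rather uses a formula that estimates the standard deviation of a population from a random sample $X_1, \dots, X_N$, dividing the sum of squares by $N-1$:

$$\bar{X} = \frac{1}{N} \sum_{i=1}^{N} X_i, \qquad s = \sqrt{\frac{1}{N-1} \sum_{i=1}^{N} (X_i - \bar{X})^2}$$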

You can see that this is the case by typing:

n <- length(x)

s-sd(x)*sqrt((n-1) / n)
## [1] 0

For all the theory discussed here, you need to compute the actual standard deviation as defined:

sqrt(mean((x-m)^2))

So be careful when using the sd function in R. However, keep in mind that throughout these notes we sometimes use the sd function when we really want the actual SD. This is because when the list size is big, these two are practically equivalent since $\sqrt{(N-1)/N} \approx 1$.
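A small example makes the difference concrete. The sketch below uses a made-up list whose SD, computed by dividing by n, is exactly 2; pop_sd is a hypothetical helper name, not a base R function.

```r
# Hypothetical helper: SD of the list itself (divide by n)
pop_sd <- function(x) sqrt(mean((x - mean(x))^2))

x <- c(2, 4, 4, 4, 5, 5, 7, 9)   # made-up list with mean 5
n <- length(x)

pop_sd(x)                    # 2: squared deviations sum to 32, and 32/8 = 4
sd(x)                        # sqrt(32/7), about 2.138: divides by n - 1
sd(x) * sqrt((n - 1) / n)    # rescaling recovers 2

# The correction factor approaches 1 as the list grows
sqrt((c(8, 100, 1000) - 1) / c(8, 100, 1000))
```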

Central Limit Theorem

The Central Limit Theorem (CLT) tells us that when the number of draws, also called the sample size, is large, the probability distribution of the sum of the independent draws is approximately normal. Because sampling models are used for so many data generation processes, the CLT is considered one of the most important mathematical insights in history.

Previously, we discussed that if we know that the distribution of a list of numbers is approximated by the normal distribution, all we need to describe the list are the average and standard deviation. We also know that the same applies to probability distributions. If a random variable has a probability distribution that is approximated with the normal distribution, then all we need to describe the probability distribution are the average and standard deviation, referred to as the expected value and standard error.

We previously ran this Monte Carlo simulation:

n <- 1000

B <- 10000

roulette_winnings <- function(n){

X <- sample(c(-1,1), n, replace = TRUE, prob=c(9/19, 10/19))

sum(X)

}

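S <- replicate(B, roulette_winnings(n))

The Central Limit Theorem (CLT) tells us that the sum $S$ is approximated by a normal distribution. Using the formulas above, we know that the expected value and standard error are: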

n * (20-18)/38
## [1] 52.63158
sqrt(n) * 2 * sqrt(90)/19
## [1] 31.57895

The theoretical values above match those obtained with the Monte Carlo simulation:

mean(S)
## [1] 52.2242
sd(S)
## [1] 31.65508

Using the CLT, we can skip the Monte Carlo simulation and instead compute the probability of the casino losing money using this approximation:

mu <- n * (20-18)/38
se <- sqrt(n) * 2 * sqrt(90)/19
pnorm(0, mu, se)
## [1] 0.04779035

which is also in very good agreement with our Monte Carlo result:

mean(S < 0)
## [1] 0.0458

How large is large in the Central Limit Theorem?

The CLT works when the number of draws is large. But large is a relative term. In many circumstances as few as 30 draws is enough to make the CLT useful. In some specific instances, as few as 10 is enough. However, these should not be considered general rules. Note, for example, that when the probability of success is very small, we need much larger sample sizes.

By way of illustration, let’s consider the lottery. In the lottery, the chances of winning are less than 1 in a million. Thousands of people play, so the number of draws is very large. Yet the number of winners, the sum of the draws, ranges between 0 and 4. This sum is certainly not well approximated by a normal distribution, so the CLT does not apply, even with the very large sample size. This is generally true when the probability of a success is very low. In these cases, the Poisson distribution is more appropriate.

You can examine the properties of the Poisson distribution using dpois and ppois. You can generate random variables following this distribution with rpois. However, we do not cover the theory here. You can learn about the Poisson distribution in any probability textbook and even Wikipedia[6].
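To see why the normal approximation fails here, consider a hypothetical lottery in which one million tickets are sold and each wins with probability one in a million (both numbers are made up for illustration). The sketch compares the exact binomial probabilities with the Poisson ones from dpois, and then asks what the normal curve suggested by the CLT would say.

```r
n <- 10^6          # hypothetical number of tickets
p <- 1 / 10^6      # hypothetical chance that a single ticket wins
lambda <- n * p    # expected number of winners (here 1)

# Exact binomial versus Poisson probabilities of 0, 1, ..., 4 winners
binom_probs <- dbinom(0:4, size = n, prob = p)
pois_probs  <- dpois(0:4, lambda)
round(rbind(binom_probs, pois_probs), 5)

# A normal with the same mean and SD puts noticeable probability
# below -0.5, i.e. on a negative number of winners, which is impossible
se <- sqrt(n * p * (1 - p))
pnorm(-0.5, lambda, se)
```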

Statistical properties of averages

There are several useful mathematical results that we used above and often employ when working with data. We list them below.

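1. The expected value of the sum of random variables is the sum of each random variable’s expected value. We can write it like this:

$$\mathrm{E}[X_1 + X_2 + \dots + X_n] = \mathrm{E}[X_1] + \mathrm{E}[X_2] + \dots + \mathrm{E}[X_n]$$

If the $X$s are independent draws from the same urn, then they all have the same expected value. Let’s call it $\mu$, and thus:

$$\mathrm{E}[X_1 + X_2 + \dots + X_n] = n\mu$$

which is another way of writing the result we show above for the sum of draws.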

2. The expected value of a non-random constant times a random variable is the non-random constant times the expected value of the random variable. This is easier to explain with symbols:

$$\mathrm{E}[aX] = a \times \mathrm{E}[X]$$

To see why this is intuitive, consider a change of units. If we change the units of a random variable, say from dollars to cents, the expectation should change in the same way. A consequence of the above two facts is that the expected value of the average of independent draws from the same urn is the expected value of the urn, call it $\mu$ again:

$$\mathrm{E}[(X_1 + X_2 + \dots + X_n)/n] = \mathrm{E}[X_1 + X_2 + \dots + X_n]/n = n\mu/n = \mu$$

3. The square of the standard error of the sum of independent random variables is the sum of the square of the standard error of each random variable. This one is easier to understand in math form:

$$\mathrm{SE}[X_1 + X_2 + \dots + X_n] = \sqrt{\mathrm{SE}[X_1]^2 + \mathrm{SE}[X_2]^2 + \dots + \mathrm{SE}[X_n]^2}$$

The square of the standard error is referred to as the variance in statistical textbooks. Note that this particular property is not as intuitive as the previous two, and more in-depth explanations can be found in statistics textbooks.

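4. The standard error of a non-random constant times a random variable is the non-random constant (in absolute value) times the random variable’s standard error. As with the expectation:

$$\mathrm{SE}[aX] = |a| \times \mathrm{SE}[X]$$

To see why this is intuitive, again think of units.

A consequence of 3 and 4 is that the standard error of the average of independent draws from the same urn is the standard deviation of the urn divided by the square root of $n$, the number of draws. Calling the standard deviation of the urn $\sigma$:

$$\mathrm{SE}[(X_1 + X_2 + \dots + X_n)/n] = \mathrm{SE}[X_1 + X_2 + \dots + X_n]/n = \sqrt{n}\,\sigma/n = \sigma/\sqrt{n}$$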

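5. If $X$ is a normally distributed random variable and $a$ and $b$ are non-random constants with $a \neq 0$, then $aX + b$ is also a normally distributed random variable. All we are doing is changing the units of the random variable by multiplying by $a$, then shifting the center by $b$.

Note that statistical textbooks use the Greek letters $\mu$ and $\sigma$ to denote the expected value and standard error, respectively. This is because $\mu$ is the Greek letter for m, the first letter of mean, which is another term used for expected value. Similarly, $\sigma$ is the Greek letter for s, the first letter of standard error.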
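These properties can be checked by simulation. The sketch below averages n = 100 draws from the roulette urn (n, B, and the seed are arbitrary choices) and compares the Monte Carlo mean and SD of the averages with $\mu$ and $\sigma/\sqrt{n}$.

```r
set.seed(3)
urn <- c(-1, 1)
probs <- c(9/19, 10/19)

mu <- sum(urn * probs)                        # expected value of one draw, 1/19
sigma <- abs(1 - (-1)) * sqrt(10/19 * 9/19)   # standard deviation of the urn
n <- 100
B <- 10000

# B averages, each of n independent draws from the urn
averages <- replicate(B, mean(sample(urn, n, replace = TRUE, prob = probs)))

c(simulated = mean(averages), theory = mu)
c(simulated = sd(averages), theory = sigma / sqrt(n))
```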

Law of large numbers

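An important implication of these results is that the standard error of the average, $\sigma/\sqrt{n}$, becomes smaller and smaller as $n$ grows larger. When $n$ is very large, the standard error is practically 0 and the average of the draws converges to the average of the urn. This is known in statistical textbooks as the law of large numbers or the law of averages.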
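A quick way to watch the law of large numbers at work is to track the running proportion of heads in simulated fair-coin tosses. In this sketch the number of tosses and the seed are arbitrary; dividing cumsum by the toss count gives the average after each toss.

```r
set.seed(4)
n <- 10^5
tosses <- sample(c(0, 1), n, replace = TRUE)   # 1 = heads, fair coin
running_avg <- cumsum(tosses) / seq_len(n)     # proportion of heads so far

# The proportion settles near 0.5 as the number of tosses grows
running_avg[c(10, 100, 1000, 10000, 100000)]
```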

Misinterpreting law of averages

The law of averages is sometimes misinterpreted. For example, if you toss a coin 5 times and see a head each time, you might hear someone argue that the next toss is probably a tail because of the law of averages: on average we should see 50% heads and 50% tails. A similar argument would be to say that red “is due” on the roulette wheel after seeing black come up five times in a row. These events are independent so the chance of a coin landing heads is 50% regardless of the previous 5. This is also the case for the roulette outcome. The law of averages applies only when the number of draws is very large and not in small samples. After a million tosses, you will definitely see about 50% heads regardless of the outcome of the first five tosses.
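The independence argument can also be checked by simulation: generate many sequences of six fair-coin tosses, keep only those that start with five heads, and look at the sixth toss. The number of sequences and the seed below are arbitrary choices.

```r
set.seed(5)
B <- 10^5
# Each row is one sequence of six independent fair-coin tosses
tosses <- matrix(sample(c("H", "T"), 6 * B, replace = TRUE), ncol = 6)

# Sequences whose first five tosses are all heads (about B/32 of them)
five_heads <- rowSums(tosses[, 1:5] == "H") == 5
sum(five_heads)

# Proportion of heads on the sixth toss after a streak of five heads
mean(tosses[five_heads, 6] == "H")
```

The proportion stays close to 0.5: the streak carries no information about the next toss.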

Another funny misuse of the law of averages is in sports when TV sportscasters predict a player is about to succeed because they have failed a few times in a row.

https://www.khanacademy.org/math/precalculus/prob-comb/dependent-events-precalc/v/monty-hall-problem

[4] https://en.wikipedia.org/w/index.php?title=Financial_crisis_of_2007%E2%80%932008

[5] https://en.wikipedia.org/w/index.php?title=Security_(finance)

[6] https://en.wikipedia.org/w/index.php?title=Poisson_distribution